Dev Log

Upgrade System (Stats Part 2)

Upgrades! Who doesn’t like a good upgrade when enemies are getting tough and you need a little boost?

Implementing a system can be easy if it’s small. An upgrade system is no different. Need to boost the speed of a spaceship? No problem, just make a variable a bit bigger. But as the number of stats, units, and possible upgrades grows, the need for a system becomes more and more obvious.

With 20, 50, or 100 different upgrades, you can’t write a new class for each upgrade and have any realistic hope of maintaining that code base, let alone debugging each and every upgrade.

So just like I did with my stats system, I wanted to share how I created my upgrade system (which is closely tied to my stats system) in hopes that it might spark ideas for you to create your own upgrade system that matches the needs of your project.

Important Details

Just like my Stats system, I wanted my upgrade system to be based on scriptable objects - they provide asset-level data packages that allow for an easy workflow, and frankly I just like them. Just like with my stats system, I’ve continued to make use of Odin Inspector to allow the serialization of dictionaries and access to those dictionaries in the inspector. If you don’t have Odin Inspector, you can use the same workarounds from the stats system to use lists instead of dictionaries.

Base Class

I don’t always love using inheritance, but in this case, I’m using it as I have several varieties of upgrades in my project - including leader upgrades, global upgrades, and building unlock upgrades.

For this post, I’ll show the inheritance, but stay focused on implementing basic upgrades for altering unit stats. If you don’t need different types of upgrades, then I’d suggest you use a single non-abstract Upgrade class.

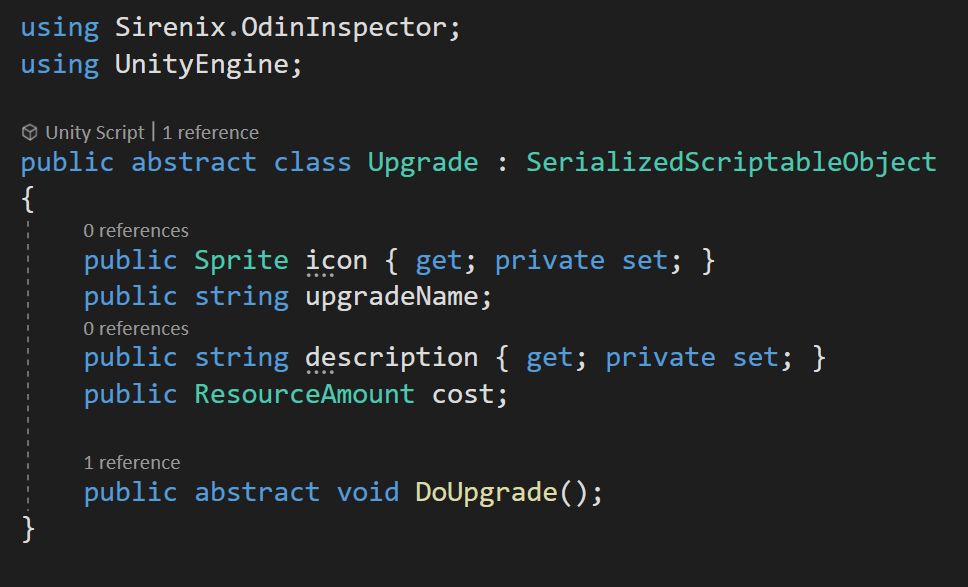

The base upgrade class is an abstract class because (for me) each type of upgrade will need to implement DoUpgrade slightly differently and I don’t want any instances of the base Upgrade class in the project. The class defines some basic properties such as a name, a description, cost, and an icon for the UI.
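As a rough illustration of the base class described above, here is a minimal sketch in plain C#. The field names and the `LoggingUpgrade` subclass are assumptions made for the example, and in a Unity project the base class would presumably derive from a scriptable object type rather than being a plain class.

```csharp
// Plain C# sketch so it runs outside Unity; in a project this would likely
// derive from ScriptableObject (or Odin's SerializedScriptableObject).
public abstract class Upgrade
{
    public string UpgradeName = "";
    public string Description = "";
    public int Cost;

    // Stored as a path only so the sketch has no Unity dependency;
    // in Unity this would more likely be a Sprite reference for the UI.
    public string IconPath = "";

    // Each type of upgrade decides what applying itself actually means.
    public abstract void DoUpgrade();
}

// Hypothetical subclass, used only to show the override pattern.
public class LoggingUpgrade : Upgrade
{
    public int TimesApplied { get; private set; }

    public override void DoUpgrade()
    {
        TimesApplied++;
    }
}
```

Because the base class is abstract, `new Upgrade()` will not compile, which is the "no instances of the base class" guarantee mentioned above.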

Stats Upgrade

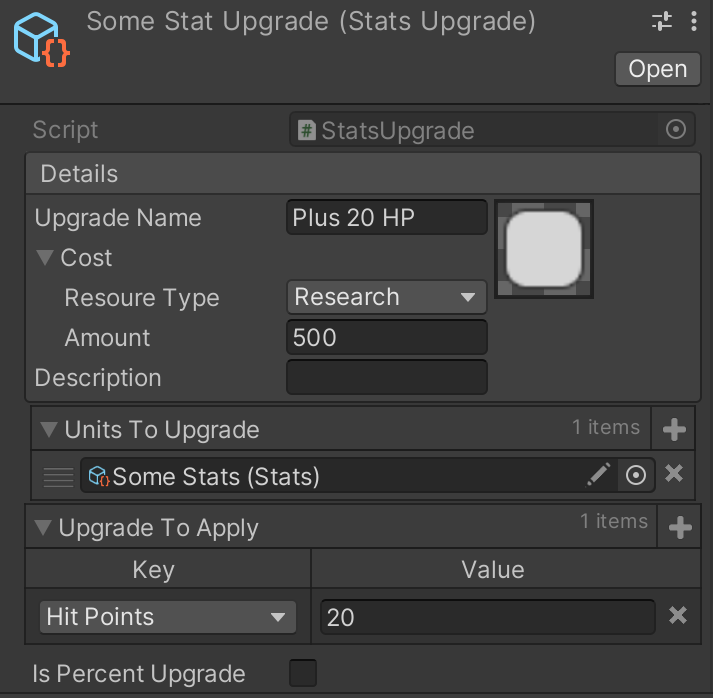

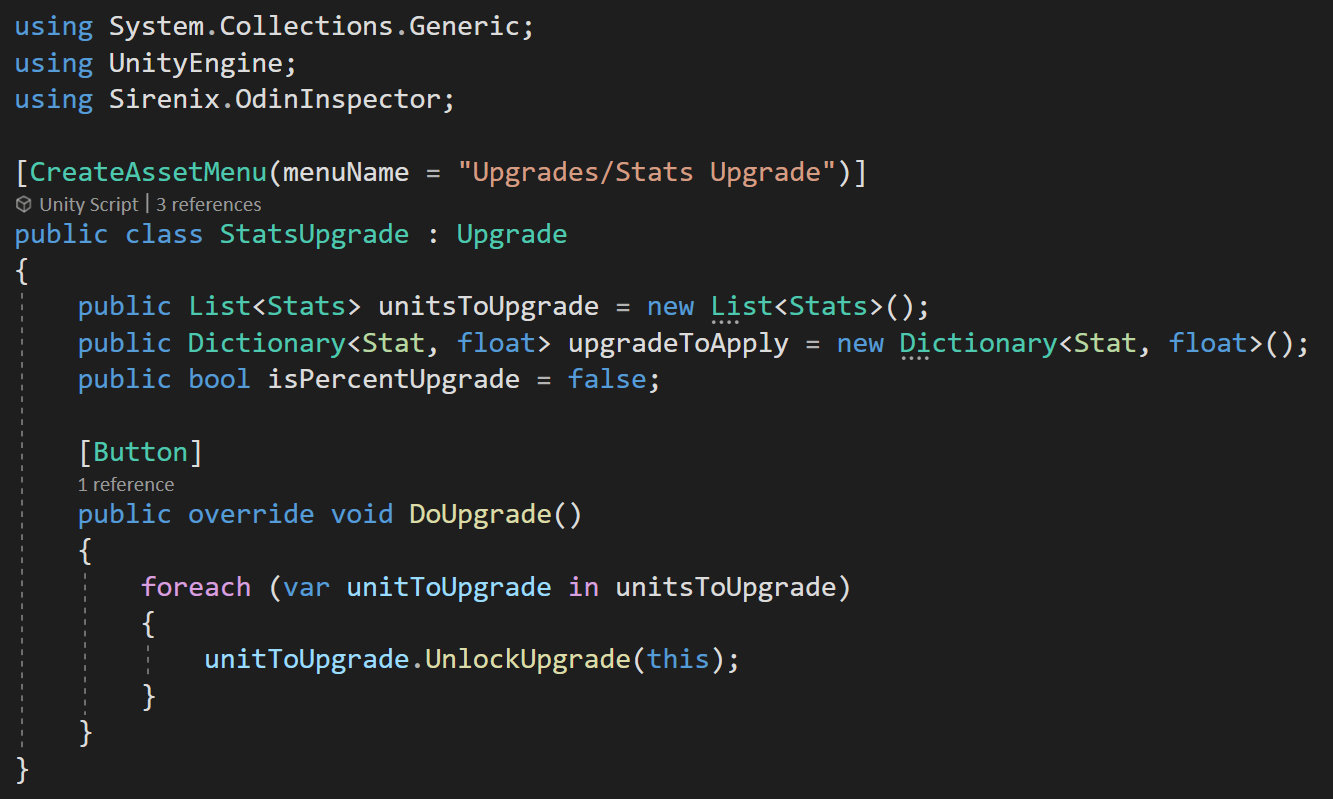

All the real functionality comes in the StatsUpgrade subclass. Here I define two important collections.

The first is a list of the units that this upgrade is to be applied to. Notice that it’s actually a list of stats objects (which are themselves scriptable objects) and not the prefabs of the unit objects. I simply drag in the stats scriptable object for any unit that I want to apply this upgrade to.

The second collection is a dictionary, but could easily be a list, of the individual stats that are affected by this upgrade and the amount that the stat is altered. Again, I’m manually adding items to define the upgrade.

Then the real functionality comes from the DoUpgrade function, but even that is pretty simple. All that happens is we iterate through all the stats objects to upgrade and call UnlockUpgrade on each one, passing in the upgrade itself.

That’s it. That’s all the stats upgrade does.
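To make the two collections and DoUpgrade concrete, here is a minimal sketch under some assumptions: the names `UnitsToUpgrade`, `UpgradeToApply`, `IsPercentUpgrade`, and the `StatType` values are invented for the example, and `Stats` is reduced to a stand-in that only tracks applied upgrades.

```csharp
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

public abstract class Upgrade
{
    public abstract void DoUpgrade();
}

// Stand-in for the stats object; only the part this sketch needs.
public class Stats
{
    public readonly List<StatsUpgrade> AppliedUpgrades = new List<StatsUpgrade>();

    public void UnlockUpgrade(StatsUpgrade upgrade)
    {
        // Applying the same upgrade twice has no extra effect.
        if (!AppliedUpgrades.Contains(upgrade))
            AppliedUpgrades.Add(upgrade);
    }
}

public class StatsUpgrade : Upgrade
{
    // The stats objects (one per unit type) this upgrade applies to.
    public List<Stats> UnitsToUpgrade = new List<Stats>();

    // Which stats are affected, and by how much.
    public Dictionary<StatType, float> UpgradeToApply = new Dictionary<StatType, float>();

    // Assumed flag: interpret the amounts as percentages instead of flat values.
    public bool IsPercentUpgrade;

    public override void DoUpgrade()
    {
        foreach (Stats unitStats in UnitsToUpgrade)
            unitStats.UnlockUpgrade(this);
    }
}
```

The upgrade itself holds no logic about how a stat value changes; it only registers itself with each stats object.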

To make this useful, we need to make some modifications to the stats class that we built in the last post (or video) to handle the upgrades.

Modifying the Stats Class

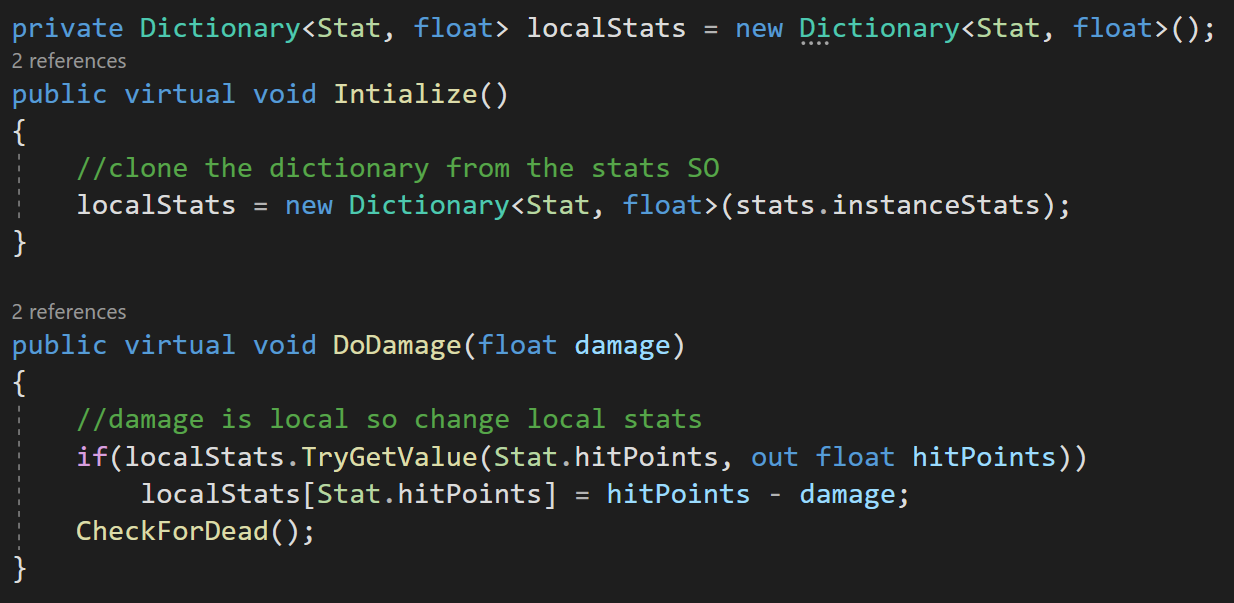

If you read the post or watched the video on my stats system you may also notice that I’ve implemented my suggestion of an “instance stats” dictionary to separate stats like “health” or “hit points” that will belong not to the type of object but to the instance of an object.

To work with the upgrade system the stats class needs a new list to track the upgrades and it’ll need a handful of new functions as well.

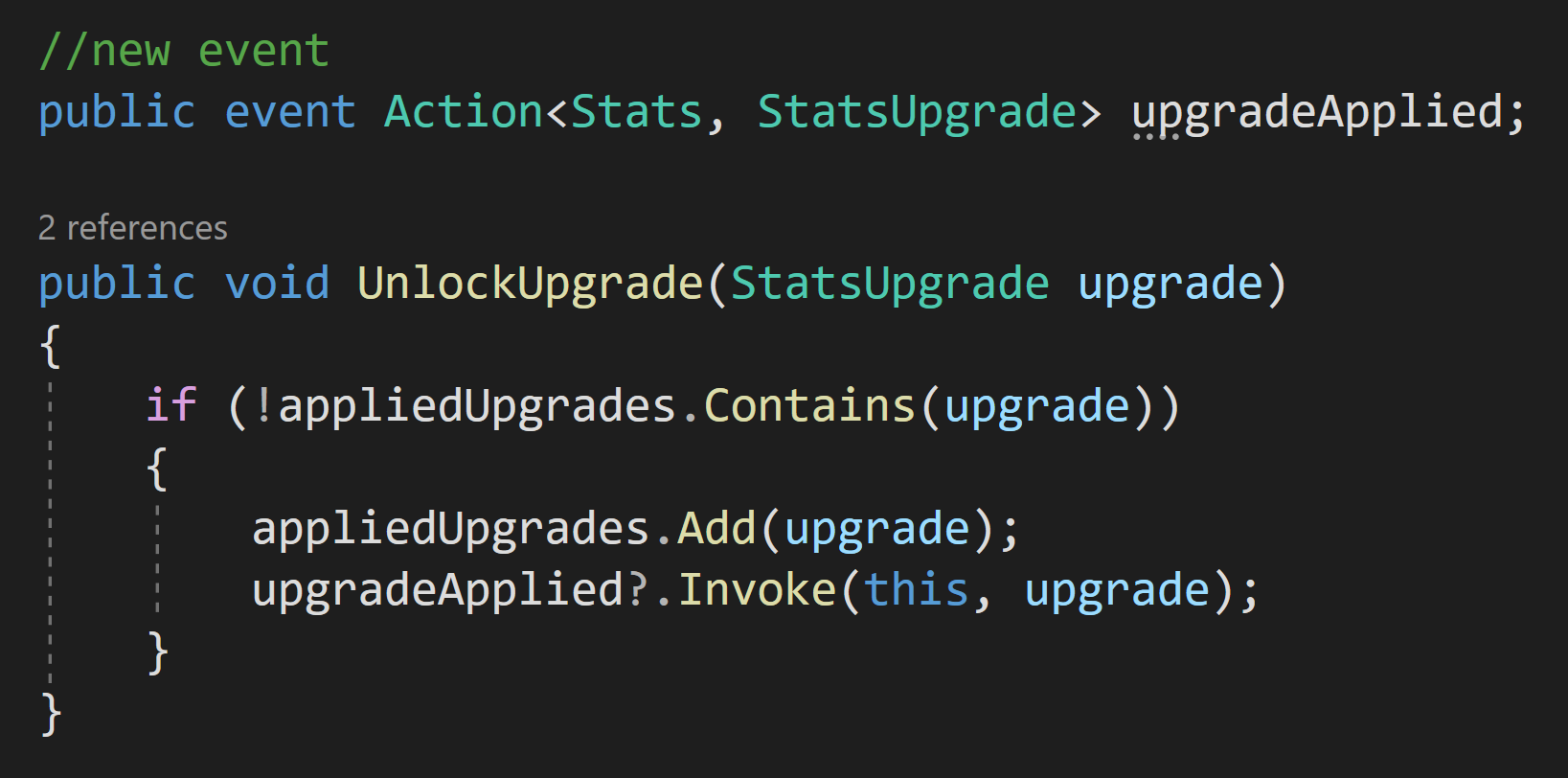

First, we need to define the UnlockUpgrade function that was called in the StatsUpgrade class. This function simply needs to check if the upgrade is already contained in the applied upgrade list and if not add it to the list.

Next, we need to modify our GetStats function to take into account any potential upgrades. To do this we first check if the desired stat is in either the instance stats dictionary or in the stats dictionary. If we find it in either dictionary we get the base value for that stat and pass it into the GetUpgradedValue function.

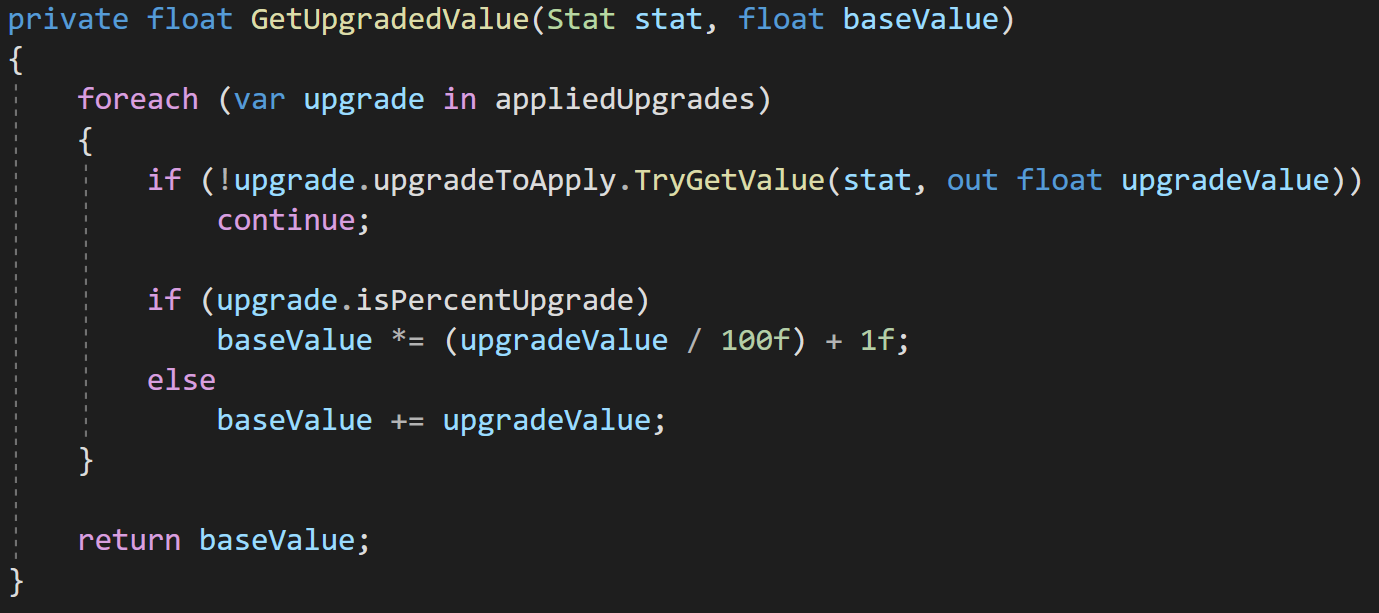

Inside this new function, we loop through all the applied stat upgrades and check if any of those stat upgrades apply to the current stat type - by looking for that stat type in the dictionary of upgrades to apply.

If we find an upgrade, we apply the value to the stat. If the upgrade was a percent upgrade the math is a bit different but the idea is the same. When we’re done looping through all the possible upgrades we return the value to the GetStats function.
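The lookup and upgrade pass described above could be sketched roughly like this. It is plain C# with assumed names (`GetStat`, `BaseStats`, `InstanceStats`, `IsPercentUpgrade`), and it assumes a percent upgrade of 10 means "+10% of the running value"; your math may differ.

```csharp
using System;
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

public class StatsUpgrade
{
    public Dictionary<StatType, float> UpgradeToApply = new Dictionary<StatType, float>();
    public bool IsPercentUpgrade; // assumed flag
}

public class Stats
{
    public Dictionary<StatType, float> InstanceStats = new Dictionary<StatType, float>();
    public Dictionary<StatType, float> BaseStats = new Dictionary<StatType, float>();
    private readonly List<StatsUpgrade> appliedUpgrades = new List<StatsUpgrade>();

    public void UnlockUpgrade(StatsUpgrade upgrade)
    {
        if (!appliedUpgrades.Contains(upgrade))
            appliedUpgrades.Add(upgrade);
    }

    public float GetStat(StatType stat)
    {
        // Check both dictionaries, then run the base value through the upgrades.
        if (InstanceStats.TryGetValue(stat, out float instanceValue))
            return GetUpgradedValue(stat, instanceValue);
        if (BaseStats.TryGetValue(stat, out float baseValue))
            return GetUpgradedValue(stat, baseValue);

        Console.Error.WriteLine($"No stat value found for {stat}"); // Debug.LogError in Unity
        return 0f;
    }

    private float GetUpgradedValue(StatType stat, float baseValue)
    {
        float value = baseValue;
        foreach (StatsUpgrade upgrade in appliedUpgrades)
        {
            // Skip upgrades that do not touch this stat type.
            if (!upgrade.UpgradeToApply.TryGetValue(stat, out float amount))
                continue;

            if (upgrade.IsPercentUpgrade)
                value *= 1f + amount / 100f;
            else
                value += amount;
        }
        return value;
    }
}
```

Note that in this sketch percent upgrades multiply the running value, so the order in which upgrades were unlocked affects the result. The stored base value is never modified; the upgraded value is recomputed on every request.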

I like this approach as the entire upgrade path is dealt with by the StatsUpgrade and Stats classes. Nothing outside these classes needs to know or even cares what’s going on - keeping things nice and tidy.

Fixing Loose Ends

#1 There is one problem in the current system, and it comes from using scriptable objects and modifying them in the Unity editor. When you apply an upgrade by adding it to a list during play mode, that upgrade will still be part of the list when you leave play mode. That means you could accidentally ship your game with an upgrade pre-applied, which is less than awesome. This is the same reason I chose to recalculate the upgraded stat value each time the value is requested rather than simply setting the value.

The solution to this is pretty simple. We just need to clear the list of applied upgrades when we enter or exit play mode.

Scriptable objects do receive OnEnable and OnDisable, but those calls are tied to the asset being loaded and unloaded rather than to a fixed point in the play mode cycle, so I don’t rely on them here. Where and how the reset function gets called is really up to you. I haven’t come up with a particularly clever solution (if I do, I’ll post it here), but for now my implementation is to simply have a Stats manager that calls the reset function on each stats object when going into play mode. This same stats manager could also tie into a future save system so that applied upgrades can be saved and restored in standalone builds.
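A bare-bones sketch of that manager idea, in plain C#. The names `StatsManager`, `ResetAll`, and `ResetAppliedUpgrades` are assumptions, and upgrades are represented by strings only to keep the example short.

```csharp
using System.Collections.Generic;

public class Stats
{
    // Upgrade names stand in for upgrade assets in this sketch.
    private readonly List<string> appliedUpgrades = new List<string>();

    public int AppliedUpgradeCount => appliedUpgrades.Count;

    public void UnlockUpgrade(string upgradeName)
    {
        if (!appliedUpgrades.Contains(upgradeName))
            appliedUpgrades.Add(upgradeName);
    }

    public void ResetAppliedUpgrades()
    {
        appliedUpgrades.Clear();
    }
}

public class StatsManager
{
    // Every stats object that should start each session with no upgrades applied.
    public List<Stats> AllStats = new List<Stats>();

    // In Unity this might be called from Awake() on a MonoBehaviour in the first scene.
    public void ResetAll()
    {
        foreach (Stats stats in AllStats)
            stats.ResetAppliedUpgrades();
    }
}
```

The trade-off is that every stats object has to be registered with the manager, so a forgotten entry would keep its stale upgrades.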

If you come up with a more clever solution I’d love to hear about it in the comments below or on the OWS discord.

Reminder: Scriptable objects are not suitable for a save system. So don’t try and turn this “problem” into a janky-ass save system. It just won’t work.

#2 There is an edge case where an upgrade may be applied to one of the “instance stats.” For example, let’s say we get an upgrade that adds 20 hit points to our tanks. With no further modification, any tank that is added AFTER the upgrade is applied should see the extra 20 hit points, but tanks that are already in the scene won’t. Maybe that’s okay or even desired, but in my game not so much.

So here is my suggested fix. It’s not the tidiest, but it works. I’m going to add a public non-static event to the Stats class that will get invoked when an upgrade is applied. Any class that might care about an upgrade can then be notified - which could be useful for functionality such as SFX or VFX or other player feedback to let them know an upgrade has been applied to a particular unit.

Changes to the Stats class

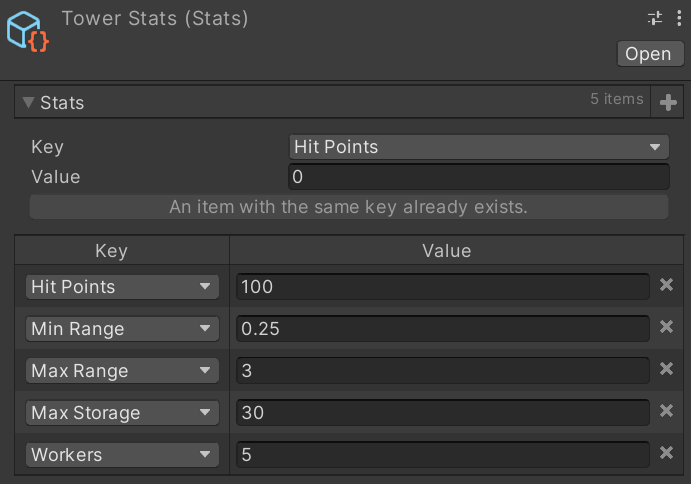

A class subscribing to the new event to process the upgrade
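Here is one way the event and a subscriber might look, as a simplified sketch rather than the exact code from my project. Upgrades are reduced to a stat type and a flat amount, and the names `UpgradeApplied`, `Tank`, and `OnUpgradeApplied` are assumptions for the example.

```csharp
using System;
using System.Collections.Generic;

public enum StatType { HitPoints }

public class Stats
{
    // Non-static: each stats object notifies only the units that use it.
    public event Action<StatType, float> UpgradeApplied;

    public float BaseHitPoints = 100f;
    private readonly Dictionary<StatType, float> bonuses = new Dictionary<StatType, float>();

    public void UnlockUpgrade(StatType stat, float amount)
    {
        bonuses.TryGetValue(stat, out float current); // current is 0 when absent
        bonuses[stat] = current + amount;
        UpgradeApplied?.Invoke(stat, amount);
    }

    public float GetStat(StatType stat)
    {
        bonuses.TryGetValue(stat, out float bonus);
        return BaseHitPoints + bonus;
    }
}

public class Tank
{
    private readonly Stats stats;
    public float CurrentHitPoints { get; private set; }

    public Tank(Stats stats)
    {
        this.stats = stats;
        CurrentHitPoints = stats.GetStat(StatType.HitPoints);
        stats.UpgradeApplied += OnUpgradeApplied; // in Unity: subscribe in OnEnable
    }

    // In Unity: unsubscribe in OnDisable so destroyed units are not called.
    public void Destroy()
    {
        stats.UpgradeApplied -= OnUpgradeApplied;
    }

    public void TakeDamage(float amount)
    {
        CurrentHitPoints -= amount;
    }

    private void OnUpgradeApplied(StatType stat, float amount)
    {
        // Existing units pick up the extra hit points immediately.
        if (stat == StatType.HitPoints)
            CurrentHitPoints += amount;
    }
}
```

A tank spawned after the upgrade reads the upgraded value at creation, while a tank already in the scene gets the same bonus through the event.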

Stats in Unity - The Way I Do it

The goal of this post is to show how I did stats and hopefully give you ideas or at least a starting point to build your own system - your needs are probably different than mine.

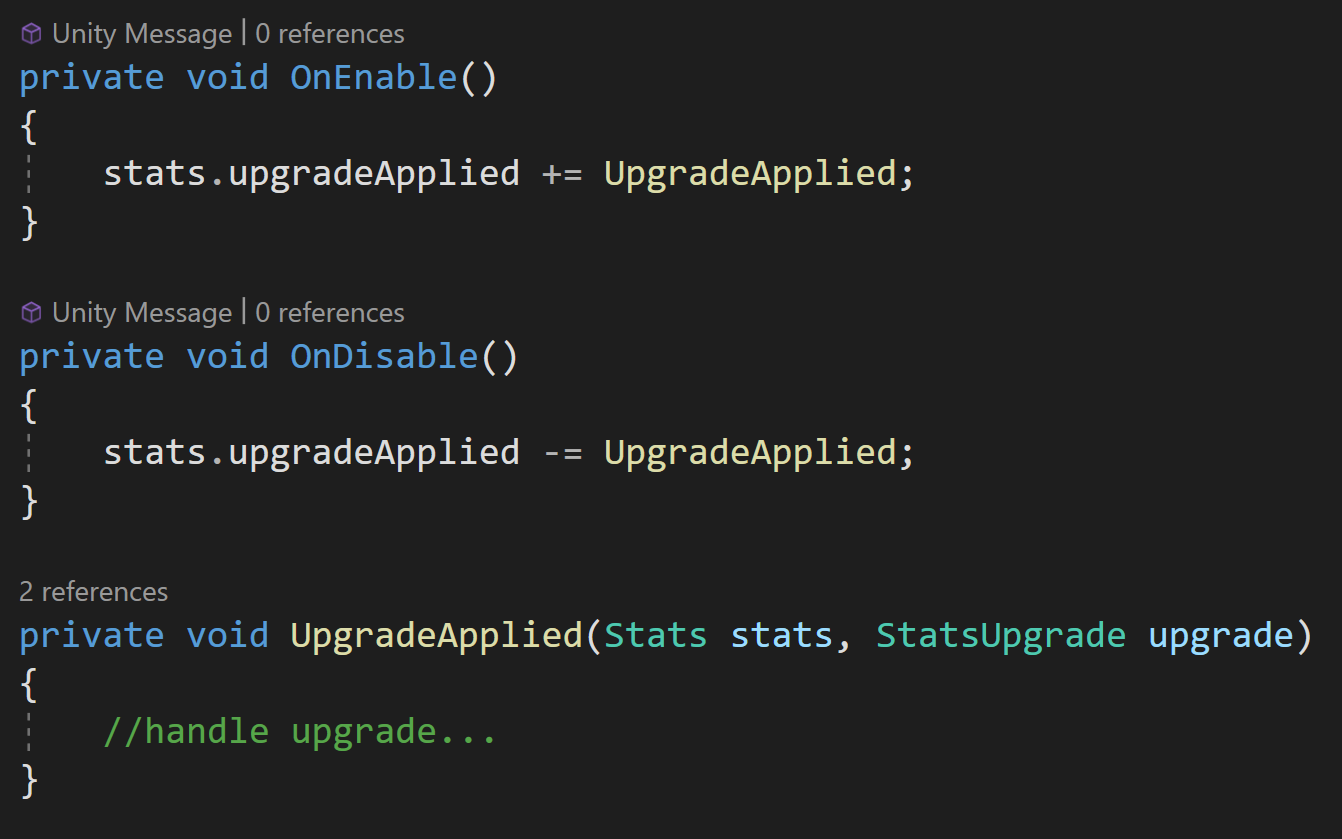

A WIP, but the buildings need stats

All games need stats in some shape or form. Maybe it’s just the speed of the player or maybe it’s full-blown RPG levels stats for players, enemies, NPCs, weapons, and armor.

For my current personal project, I knew I was going to need stats on the buildings as well as the enemy units.

A good way to do this is to create a non-monobehaviour stats class that has a public field for every stat. Then all classes that need stats can have a public stats field and you’re good to go. For a lot of uses, this might be more than enough.
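For reference, that simple approach might look something like the sketch below. The class and field names are made up for the example; in Unity the `[Serializable]` attribute is what lets the fields show up in the inspector of whatever component holds them.

```csharp
using System;

// A plain data class: no MonoBehaviour, one public field per stat.
[Serializable]
public class UnitStats
{
    public float Speed = 5f;
    public float Damage = 10f;
    public int HitPoints = 100;
}

// Any class that needs stats just holds one (composition, not inheritance).
public class Tower
{
    public UnitStats Stats = new UnitStats();
}
```

This works well until different units need different sets of stats, at which point every new stat means another field on every unit.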

Making the stats class a non-MonoBehaviour class can help enforce a good composition-over-inheritance structure.

While this is good, I wanted something more.

I wanted something generic. I wanted different units to have different types of stats. I wanted a quick and easy way to get values of stats - without creating all kinds of functions or properties to access individual stats. And lastly, I wanted a stat system that could work with an upgrade system with similar ease and adaptability.

My implementation of an upgrade system will get shared in a follow-up post.

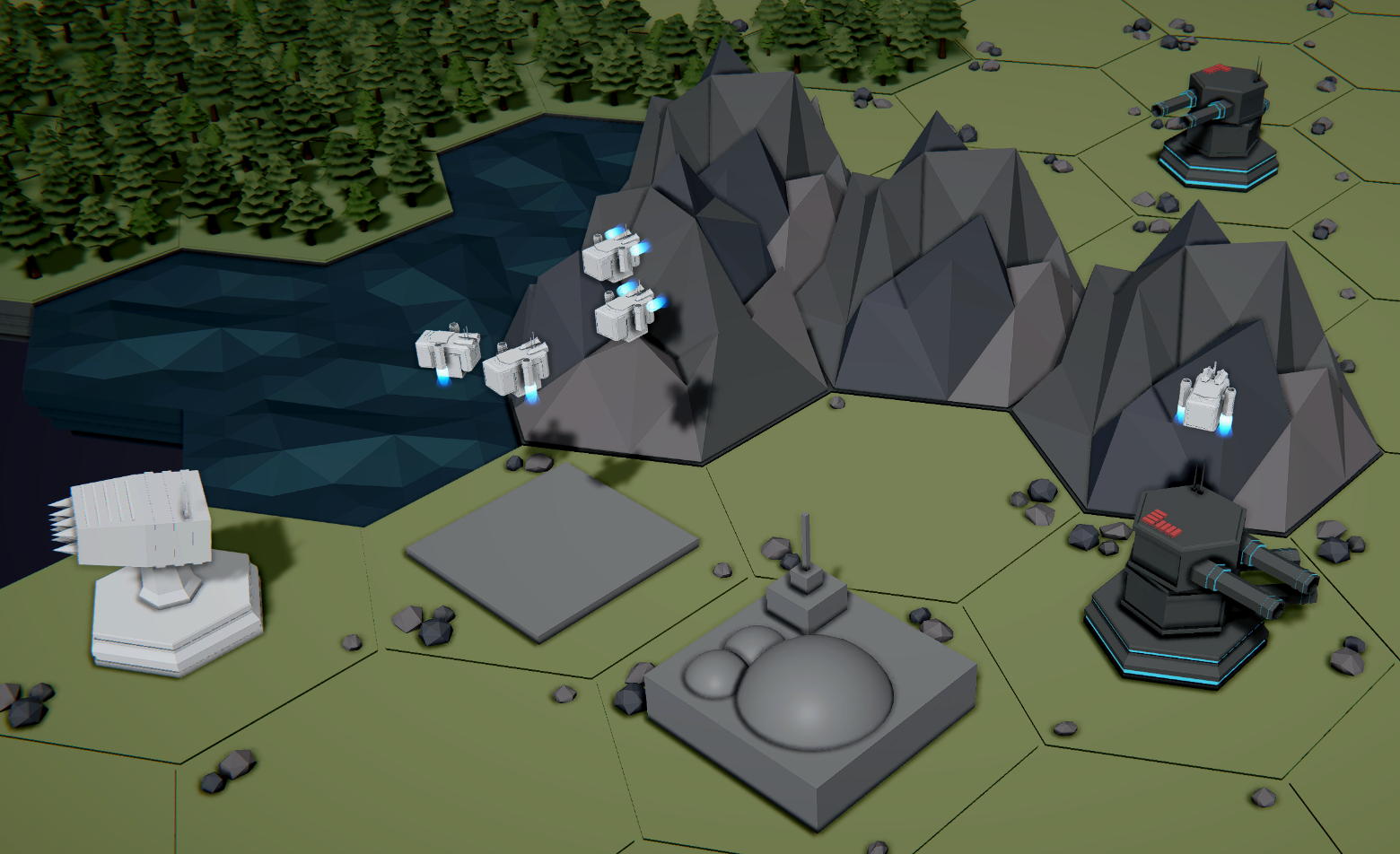

My stats. Easy to use. Easy to Create

Stats in a Collection

Having my stats in a collection (a dictionary in my case, but lists also work) means I can easily add stat types and adjust stat values for a given type of unit. While I very much have a love-hate relationship with assigning values in the inspector - this is a win in my book all day every day.

This was a crucial piece of the puzzle given that not all units will have the same types of stats - farmers and defensive towers have very different functions and so they need different stats!

Using Scriptable Objects

I chose to use a scriptable object (SO) as the container for my stats. This keeps my data separate from the logic that uses the data. Which I like.

It also means that each stats container is an asset and can be shared with any object that needs access to it. It works project-wide.

For example, every “tower” has access to the same stats object. If the UI needs to display stats - they access the same SO. This reduces the need to duplicate information and more importantly reduces possible errors or needs to keep “everything up to date” - the UI and units always have the same values.

Upgrades can also be easy. Apply an upgrade to the stats object and every tower gets it. No need to hunt through the scene for objects of a certain type to apply the upgrade. Additionally, if I apply an upgrade in Level 1, that upgrade can easily transfer over to Level 2 as it can be applied to the SO. Pretty handy.

Important Note: Scriptable objects can be used to transfer data from one scene to another. BUT! They are not a save system. In a build, changes to an SO are lost when the game closes, and in the editor, changes made during play mode can linger on the asset after you leave play mode.

As a slight tangent, I also like that the SO, at some level defines the characteristics of the object. For example, in my project I want players to be able to choose a leader at the start of a new game or even at the start of a new level - the leaders effectively act as a global upgrade. By having each leader’s stats on a SO, I can simply drop the leader’s SO into whatever script is handling the leader’s logic and the effect is a change in leadership - a strategy pattern-like effect. The same effect can be had with stats - depending on your exact implementation.

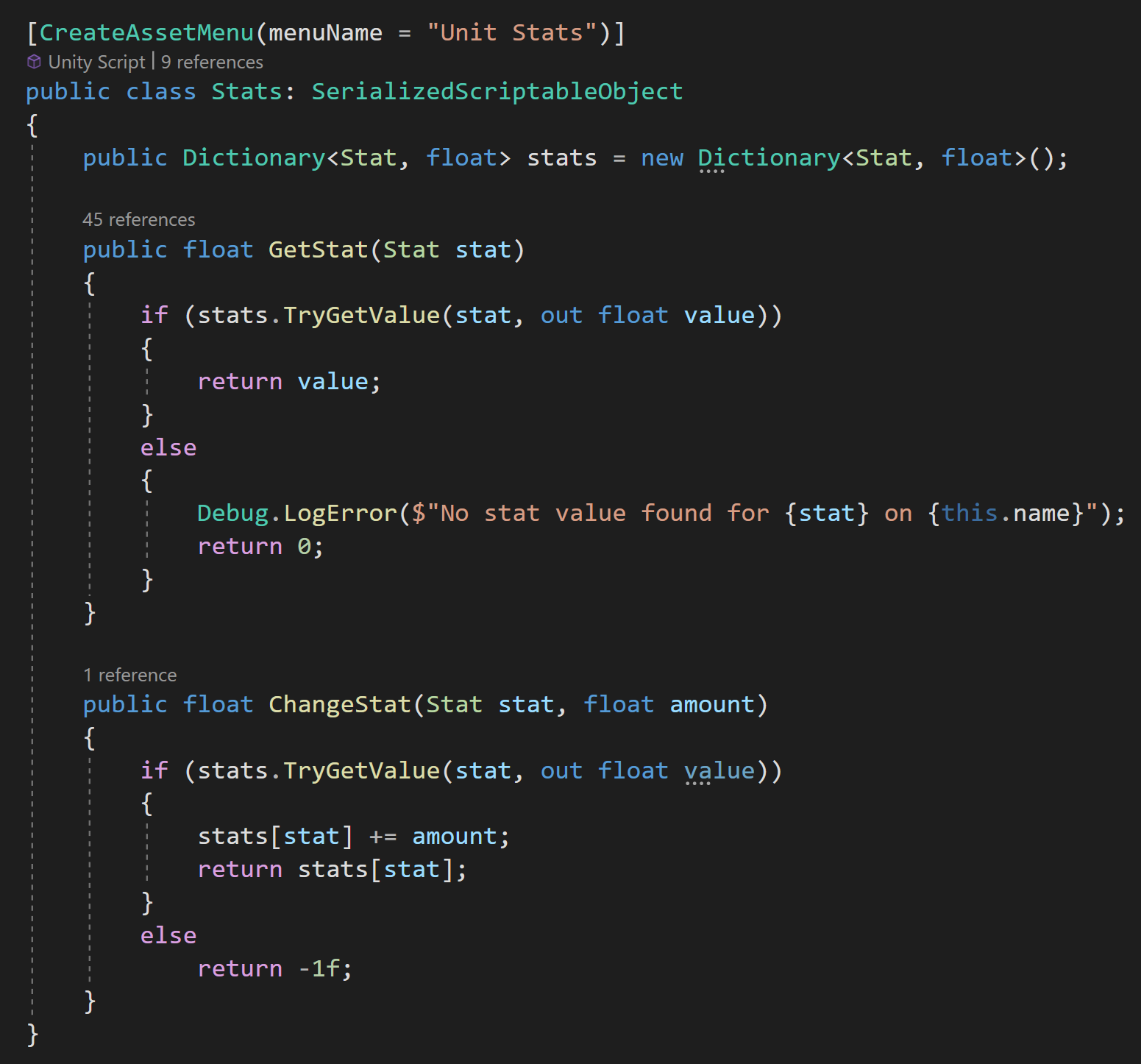

Quick Stat Look-Up

Putting my stats in a dictionary with an enum for the key and the value holding the stat value makes for a quick and easy method to access a given stat. No need to create properties. All I need is one public function that takes in the stat type and returns the value. This continues to work if I add or remove a stat type making my system a little less brittle.

This approach does mean that a stat could be requested and that it isn’t in the dictionary. If this is the case, I return zero and send an error to the console. Ideally, this isn’t happening, but with this implementation, nothing breaks, and as the developer, I get a message letting me know that I either asked for the wrong stat or I haven’t created a stat type for a given unit. Again, nice and clean.
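A minimal sketch of that lookup in plain C#. The names `StatType`, `StatValues`, and `GetStat` are assumptions, and `Console.Error.WriteLine` stands in for Unity's `Debug.LogError`.

```csharp
using System;
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints, Range }

public class Stats
{
    // Enum key, float value; filled in the inspector in the real project.
    public Dictionary<StatType, float> StatValues = new Dictionary<StatType, float>();

    public float GetStat(StatType stat)
    {
        if (StatValues.TryGetValue(stat, out float value))
            return value;

        // Nothing breaks, but the developer is told about the missing stat.
        Console.Error.WriteLine($"No stat value found for {stat}");
        return 0f;
    }
}
```

Adding a new stat type is then just a new enum value plus a dictionary entry; no new property or accessor is needed.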

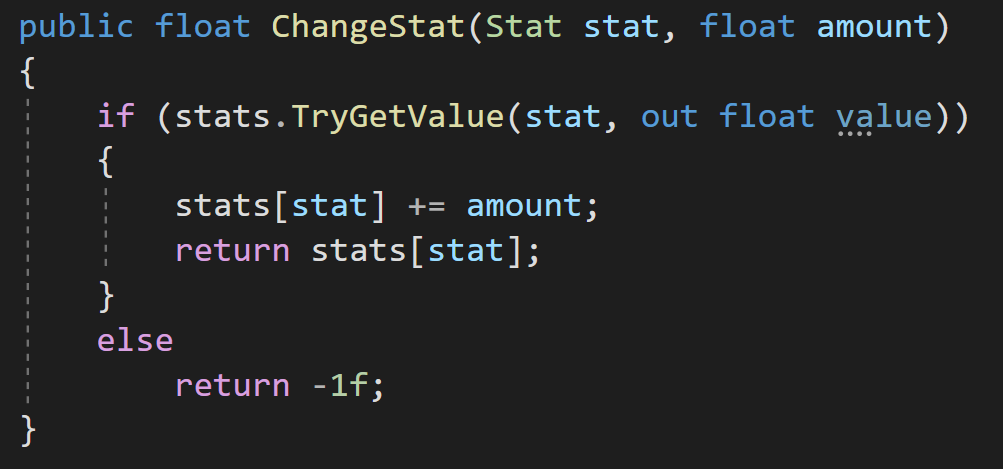

Changing Stat Values

Similarly, if you need to change a stat on the fly - a potential path for an upgrade system - a single public function can again be used. Once again, remember that the SO is not a save system: changes to it won’t survive closing a build.

In this case, I chose to return a negative value if the stat couldn’t be found… Would zero have been better? Maybe. Depends on your use case. I chose negative as things like hit points can be zero without something being wrong.
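As a sketch of that idea, assuming the function adds an amount to the stored value and returns the new value (the name `ChangeStat` comes from later in this post; the signature and the `-1` sentinel are assumptions):

```csharp
using System;
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

public class Stats
{
    public Dictionary<StatType, float> StatValues = new Dictionary<StatType, float>();

    // Returns the new value, or -1 when the stat does not exist on this object.
    public float ChangeStat(StatType stat, float amount)
    {
        if (!StatValues.TryGetValue(stat, out float current))
        {
            Console.Error.WriteLine($"No stat value found for {stat}"); // Debug.LogError in Unity
            return -1f;
        }

        float updated = current + amount;
        StatValues[stat] = updated;
        return updated;
    }
}
```

One caveat with a negative sentinel: if a stat can legitimately drop below zero, the caller can no longer tell "not found" from a real value, so a bool-returning variant might suit those cases better.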

Potential Issues

For those who are paying attention, there are at least two potential issues with this system.

The Dictionary

You may have noticed that my scriptable object is actually a “SerializedScriptableObject” which isn’t a class built into Unity. Instead, it’s part of Odin Inspector and it allows the serialization and display of dictionaries in the Unity inspector. Without this class, you can’t see the dictionary in the inspector and you can’t add stats in the inspector… It’s a potential problem. There are at least two workarounds - short of buying Odin.

Fix #1

Use a list instead of a dictionary. You would need to create a custom class that has fields for the stat type and the stat value. Then you would need to alter the GetStat() and ChangeStat() functions to iterate through the list and find the result.

A bit messier, but not too bad. If you are concerned about the performance of a list vs a dictionary, there is definitely a difference, but the extra time to iterate through a list of 5, 10, or 20 stat types is negligible for most use cases.
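The list-based version could be sketched like this. `StatEntry` and `StatList` are hypothetical names; the entry is a class rather than a struct so that changing `Value` inside the loop updates the list item itself.

```csharp
using System;
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

// One entry per stat; [Serializable] lets Unity draw it in the inspector.
[Serializable]
public class StatEntry
{
    public StatType Type;
    public float Value;
}

public class Stats
{
    public List<StatEntry> StatList = new List<StatEntry>();

    public float GetStat(StatType stat)
    {
        foreach (StatEntry entry in StatList)
        {
            if (entry.Type == stat)
                return entry.Value;
        }

        Console.Error.WriteLine($"No stat value found for {stat}");
        return 0f;
    }

    public float ChangeStat(StatType stat, float amount)
    {
        foreach (StatEntry entry in StatList)
        {
            if (entry.Type != stat)
                continue;

            entry.Value += amount;
            return entry.Value;
        }

        return -1f; // not found
    }
}
```

Unlike a dictionary, a list does nothing to stop the same stat type being added twice; in this sketch the first matching entry wins.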

Fix #2

But if you insist on using a dictionary, the second fix would be to use your list to populate a dictionary at runtime and then use that dictionary during play mode. This could be done in an initialization function or the first time that a stat is requested. A bit messier, but definitely doable.
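A small sketch of the "build it the first time a stat is requested" variant. The names are assumptions again; the list is what you would edit in the inspector and the dictionary exists only at runtime.

```csharp
using System;
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

[Serializable]
public class StatEntry
{
    public StatType Type;
    public float Value;
}

public class Stats
{
    // Edited in the inspector.
    public List<StatEntry> StatList = new List<StatEntry>();

    // Built lazily from the list; never serialized.
    private Dictionary<StatType, float> lookup;

    private Dictionary<StatType, float> Lookup
    {
        get
        {
            if (lookup == null)
            {
                lookup = new Dictionary<StatType, float>();
                foreach (StatEntry entry in StatList)
                    lookup[entry.Type] = entry.Value; // a duplicate type overwrites the earlier entry
            }
            return lookup;
        }
    }

    public float GetStat(StatType stat)
    {
        if (Lookup.TryGetValue(stat, out float value))
            return value;

        Console.Error.WriteLine($"No stat value found for {stat}");
        return 0f;
    }
}
```

Keep in mind that once the dictionary is built, later edits to the list are ignored unless you rebuild it.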

The Scriptable Object

Having every unit of a type share stats is a good thing. Unless of course, a stat needs to be for the individual instance. Something like hit points or health. In those cases, we have a problem and need to work around it. So let me propose a couple of solutions.

Fix #1

Have each unit instantiate a copy of the SO when the unit is created. This makes the original SO just a template and each object will have its own copy. This breaks the “every unit of a type shares the same stats” idea, but it means that every unit of a type starts with the same stats.

This effectively means that the SO tracks max values or starting values while the object itself tracks the current value of the stat.

This is the method I have used in my project to prevent all units of a type from sharing health, but unfortunately, it will likely break my upgrade system moving forward. So….

Fix #2

Or you could create an additional dictionary (or list) of stats on the SO that should be copied onto the instance. Then functions such as a DoDamage() that change the value of a local or instance stat simply change the local value instead of changing the value on the SO.

This is likely my preferred solution moving forward as the SO still defines all stats for the object while individual objects have control of their instanced stats.
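Here is a rough sketch of that second fix, assuming a separate `InstanceStats` dictionary on the shared object that each unit copies when it is created. The names `Unit`, `CreateInstanceStats`, and `DoDamage` are illustrative only.

```csharp
using System.Collections.Generic;

public enum StatType { Speed, Damage, HitPoints }

// Shared by every unit of a type (the SO in the real project).
public class Stats
{
    public Dictionary<StatType, float> SharedStats = new Dictionary<StatType, float>();

    // Starting values for stats that belong to the individual unit.
    public Dictionary<StatType, float> InstanceStats = new Dictionary<StatType, float>();

    // Each unit gets its own copy, so changes never reach the shared object.
    public Dictionary<StatType, float> CreateInstanceStats()
    {
        return new Dictionary<StatType, float>(InstanceStats);
    }
}

public class Unit
{
    private readonly Dictionary<StatType, float> localStats;

    public Unit(Stats stats)
    {
        localStats = stats.CreateInstanceStats();
    }

    public float HitPoints
    {
        get
        {
            localStats.TryGetValue(StatType.HitPoints, out float value);
            return value;
        }
    }

    public void DoDamage(float amount)
    {
        // Only the local copy changes.
        if (localStats.TryGetValue(StatType.HitPoints, out float current))
            localStats[StatType.HitPoints] = current - amount;
    }
}
```

The shared object still defines which stats exist and their starting values, while damage to one unit leaves every other unit of that type alone.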

State of UI in Unity - UI Toolkit

UI Toolkit. It’s free. It’s built into the Unity game engine. It promises way more functionality than UGUI. Sounds perfect, right?

Well. Maybe. Turns out it just isn’t that simple.

So let’s talk about UI Toolkit.

A Bit of History

There is a huge elephant in the room when it comes to UI Toolkit. That being the development timeline.

UI Toolkit, or rather UIElements as it was called then, was announced sometime in 2017 (I believe) with a roadmap released early in 2018, and the first release with some functionality came in Unity 2019.1. This was all leading to the goal of having parity with UGUI with the release of Unity 2020.3.

While UI Toolkit has come a long way - it’s still not able to fully replace UGUI. The latest official release from Unity says UI Toolkit is in maintenance mode through the Unity 2022 cycle and no new features will be added until the release of Unity 2023.

Based on the recent Unity release pattern, this means we likely won’t be seeing a feature-complete UI Toolkit until sometime in the calendar year 2024. That’s 6-7 years from announcement to being feature complete. 7 years ago I was still pretty fast on a mountain bike…

The Pros

UI Toolkit offers far better control of UI layout than UGUI. Many of the layout features that I find so attractive about Nova UI are either built into UI Toolkit or are on the roadmap. On top of that, UI Toolkit can be used to create custom editor windows, which neither UGUI nor Nova UI can or will ever do.

Plus! And this is no small thing, UI Toolkit allows styles or themes to be defined and reused. It’s no secret if you’ve watched any of my recent streams, I find color choice and visual design really really hard. With UGUI if you want to tweak the color of a button background, you either make prefabs, which can work sometimes, or you have to change each and every button manually. I hated this workflow so much that I built a janky - but effective - asset to let me define UGUI styles. While Nova is working on themes, they aren’t ready or available for developers just yet.

UI Toolkit also promises far better performance than UGUI - much of it is done behind the scenes with little effort from the developer. With UGUI if you change an element of a canvas the entire canvas has to be redrawn - which isn’t an issue with simple UI but can become a significant issue with more complex designs when you are trying to eke out every little bit of performance.

Despite significant differences in how to create the UI, to Unity’s credit, much of the programming with UI Toolkit should feel familiar to folks comfortable working with C# and UGUI. While there will certainly be some nuance and naming differences, programming the interactivity of UI Toolkit should not be a major hurdle.

And of course the last big win for UI Toolkit? It’s free! For a lot of folks that right there is all the reason to ignore Nova UI and give Unity’s newest solution a serious go.

Stolen from a Unity Video On UI Toolkit

The Cons

The biggest question about UI Toolkit is will it ever be done? Will it be feature complete? Will it truly have parity with UGUI? How much will change in the process? Will those changes break your project?

There are two big and commonly used UI features that UI Toolkit doesn’t have (yet). First is world space UI. If you don’t need it. Not a big deal. The second incomplete feature is UI animation. Some is supported but not all. Is this a problem? Maybe? Depends on your project.

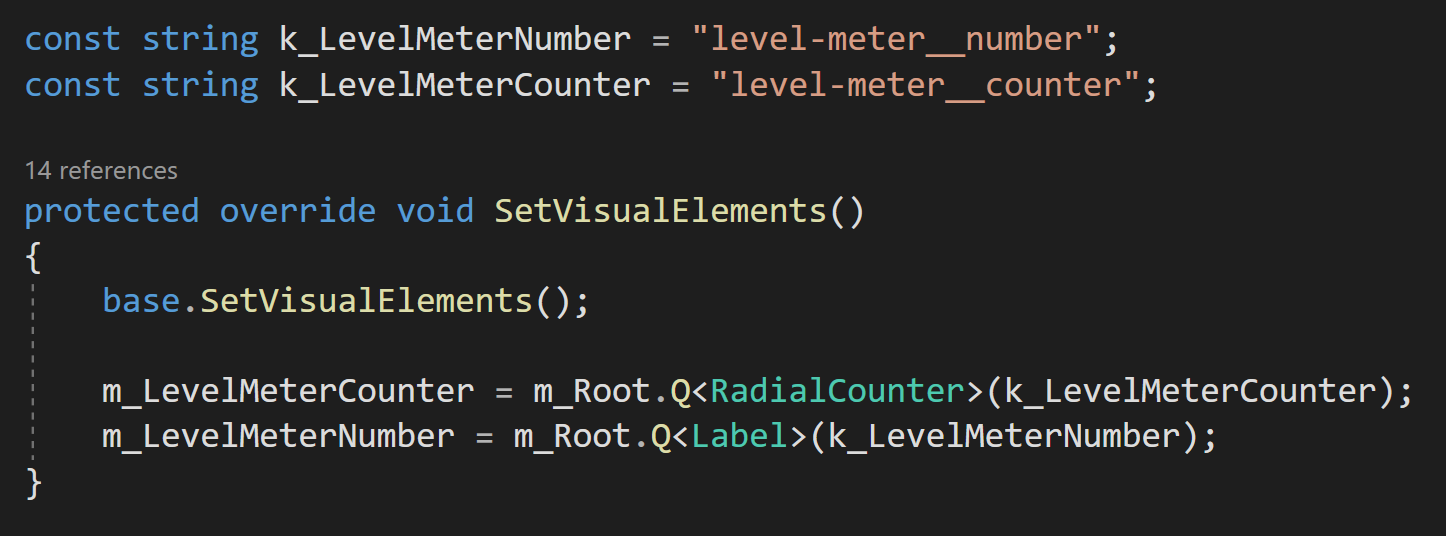

Data binding with UI Toolkit is less than awesome. Finding objects by name? Using strings? This doesn’t feel sustainable or scalable, or at the very least it’s just not a pleasant way to work. Even the devs have commented about it and are planning to revamp it with a more generic solution. What exactly that means? We’ll have to see.

With any choice of system, you need to look carefully at what it can do, and what it can’t do, and compare that to what you need to do.

The Toss-Ups

The workflow and design of UI Toolkit largely reflect web design and workflow. Is that a pro? Is that a con? That depends on your experience and maybe your willingness to learn a new approach. For me and I suspect many others this is the deciding factor. UI Toolkit feels TOTALLY different than the UGUI or Nova workflow. The pattern of gameObjects, components, and prefabs is replaced with USS, UXML, and UI documents.

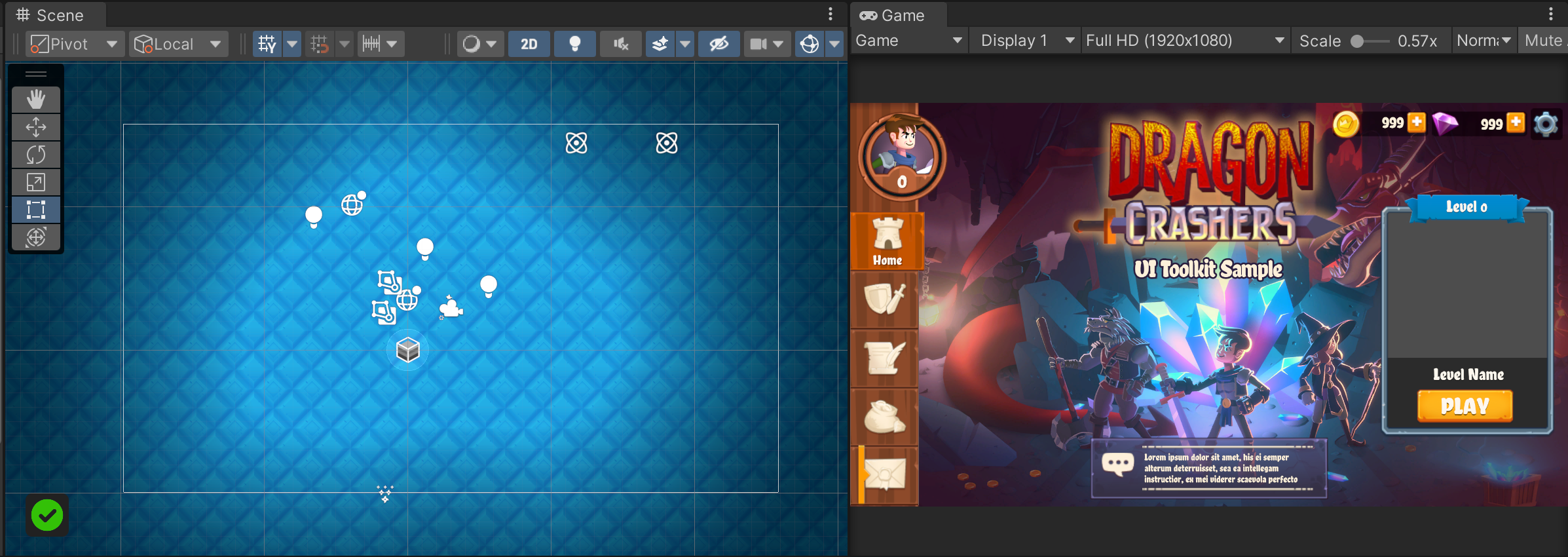

Also, the UI is no longer really part of the scene - in the sense that you can’t see it in the scene view and it’s really in its own container. The UI elements are NOT scene objects. Again, it’s different, which isn’t good or bad, but it is really different.

For some, these are the exact reasons to go with UI Toolkit. For others, they’re the perfect reasons to stay with UGUI or Nova UI.

The sceneview (left) vs the GameView (right)

An Experiment

The results. Can you tell which result came from which UI tool?

I felt like if I was going to make a second video talking about UI options in Unity I really needed to have SOME comparison of the tools. So I set out to make the “same” UI with UGUI, UI Toolkit, and Nova UI. I wanted to create a slider that changed the alpha of an image and buttons that would collapse text. Nothing too fancy, but functions the same as or similar to those used in a lot of projects.

I spent a bit longer with UGUI (18:02) than with Nova UI (17:39) and, as expected given my lack of knowledge and experience, far longer with UI Toolkit (56:16). Those times are based on recording the process with each tool. You can see the final results in the video to the right.

In all cases, default or included assets were used. No formatting of colors, textures, or fonts was intentionally done.

I KNOW that it is possible to make this UI with all three tools. The point was not to say one tool is better than another. The point was just to experience each tool and the process of using each tool. That said, for me, with my experience, my brain, and my understanding, it was clear that one tool in particular is easily the best choice in terms of the workflow and the quality of the final result.

Let me explain more…

My Thoughts on UI Toolkit

I have zero experience with modern web development or design. I’d like to think I can learn and adapt to a new system, but I can’t explain just how foreign UI Toolkit felt to me. Sure I could drag and drop things into the UI Builder and mess around with values in the inspector, but after spending a few hours (more than just the testing above) with it I had way more questions than answers. I was playing and experimenting with Unity’s Dragon Crashers project - I had examples in front of me but I still very much struggled to see how it all worked and connected.

For example, there is a nice-looking slider in the library. It works. There are lots of great options and adjustments in the inspector. But for the life of me, I could not figure out how to scale it on the vertical axis. The horizontal axis, no problem, but make it thicker? Nope.

Video of a UI Toolkit slider at 30 fps…

I did some googling and found the same question online with no posted answer. Now clearly there is an answer. There is a way to do it. But it’s a way that I couldn’t figure out.

And then there’s the UI builder window. There’s no way to sugarcoat it, the performance of UI builder was horrible. I don’t know how else to say it. With just a few elements it’s a non-issue. But load up the main menu of Dragon Crashers and slide a few values around and the editor becomes nearly unusable. You can see the lag in the video to the right. I saw similar results in my simple “experiment” use case too.

Just to make sure I wasn’t crazy or being overly critical I opened up the profiler to do some testing. Sure enough, there’s a HUGE lag spike while dragging a value in the UI Builder inspector. This isn’t a deal breaker, but it sure makes the tool harder to use.

UI Builder lag

Strings? Really? Why?

Then I ran into my active disdain for strings. I will freely admit that when I saw that strings were being used to get references to UI elements I was really put off.

Why? Why do it that way? Why lose strongly typed references? Maybe this is how web development is done and folks are used to it. But this feels like a step backward.

The dev team agrees or at least sees it as an area to improve, so they are looking into “more generic” solutions, but right now those solutions don’t exist and who knows when or if they will materialize.

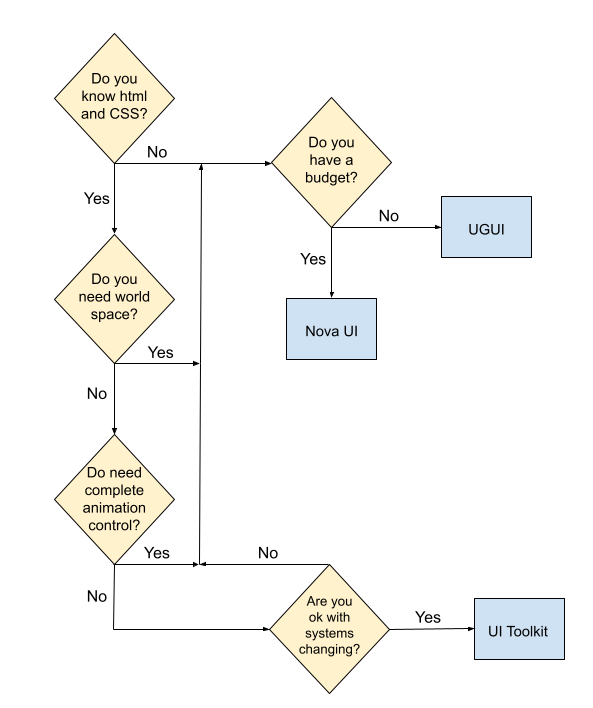

So Should You Use It?

In my mind, trying to decide if UI Toolkit is the right solution comes down to a handful of questions (and a lovely flow chart).

Do you know and like web design workflows?

Do you need world space UI?

Do you need complete control of UI animations?

Are you okay with the UI system changing?

Final Thoughts

Options are good to have. I see UGUI, UI Toolkit, and Nova UI each as viable UI solutions depending on the needs of the project and the skill set of the developers. Each has shortcomings. None of them are perfect.

UI Toolkit is in this weird alpha/beta mode where it’s been released, but it isn’t feature-complete and has potential breaking changes coming in the future. That means much of the tutorial information out there is outdated. It also doesn’t give content creators a good incentive to make more than just introductory content. This makes it harder for the average user to get up to speed. Unity keeps doing this and it feels so counterproductive!

But here’s the best part of the situation. All three of these solutions can be tried for free. Nova has a free version while UGUI and UI Toolkit are shipping with recent versions of Unity. So my advice? Try them. Play with them. Do an experiment like I did. Find the right tool for you and your project. I have my UI solution. I love it. But that doesn’t mean it’s the right solution for everyone.
