Designing a New Game - My Process

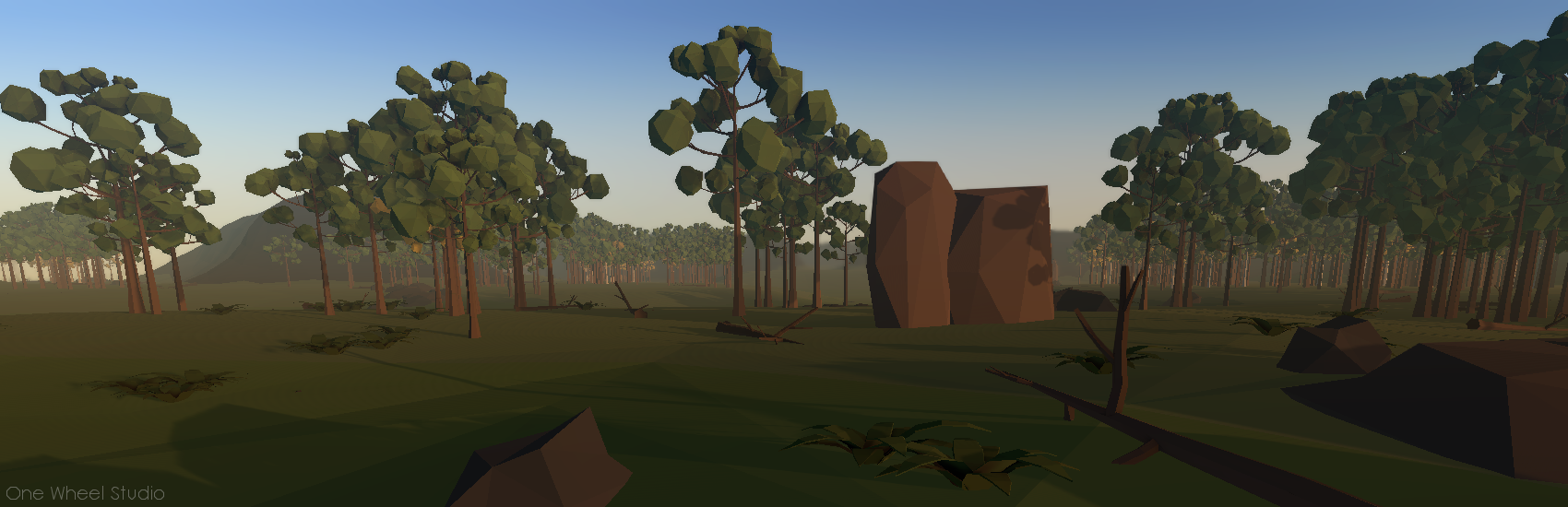

Some of my projects…

This is my process. It’s been refined over 8+ years of tinkering with Unity, 2 game jams, and 2 games published to Steam.

My goal with this post is just to share. Share what I’ve learned and share how I am designing my next project. My goal is not to suggest that I’ve found the golden ticket. Cause I haven’t. I’m pretty sure the perfect design process does not exist.

So these are my thoughts. These are the questions I ask myself as I stumble along in the process of designing a project. Maybe this post will be helpful. Maybe it won’t. If it feels long-winded, it probably is.

I’ve tried just opening Unity and designing as I go. It didn’t work out well. So again, this is just me sharing.

TL;DR

Set a Goal - To Learn? For fun? To sell?

Play games as research - Play small games and take notes.

Prototype systems - What don’t you know how to build? Is X or Y actually doable or fun?

Culling - What takes too long? What’s too hard? What is too complicated?

Plan - Do the hard work and plan the game. Big and small mechanics. Art. Major systems.

Minimum Viable Product - Not the game, just the basics. Is it fun? How long did it take?

Build it! - The hardest part. Also the most rewarding.

What Is The Goal?

When starting a new project, I first think about the goal for the project. For me, this is THE key step in designing a project - which is a necessary step to the holy grail of actually FINISHING a project. EVERY other step and decision in the process should reflect back on the goal or should be seen through the lens of that goal. If the design choice doesn’t help to reach the goal, then I need to make a different decision.

Am I making a game to share with friends? Am I creating a tech demo to learn a process or technique? Am I wanting to add to my portfolio of work? What is the time frame? Weeks? Months? Maybe a year or two (scary)?

I want another title in this list!

For this next project, I want to add another game to the OWS Steam library and I’d like to generate some income in the process. I have no dreams of creating the next big hit, but if I could sell 1000 or 10,000 copies - that would be awesome.

I also want to do it somewhat quickly. Ideally, I could have the project done in 6 to 9 months, but 12 to 18 months is more likely with the time I can realistically devote to the project. One thing I do know is that whatever amount of time I think it’ll take, it’ll likely take double.

Research!

After setting a goal, the next step is research. Always research. And yes. I mean playing games! I look for games that are of a similar scope to what I think I can make. Little games. Games with interesting or unique mechanics. Games made by individuals or MAYBE a team of 2 or 3. As I play I ask myself questions:

What elements do I find fun? What aspects do I not enjoy? Do I want to keep playing? What is making me want to quit? What mechanics or ideas can I steal? What systems do I know or not know how to make? Which systems are complex? What might be easy to add?

Then there are a few more questions. These are key in designing a game and can help to keep the game scope (somewhat) in check, which in turn is necessary if a game is going to get finished.

How did a game developer’s clever design decisions simplify the design? How does a game make something fun without being complex? Why might the developer have made decisions X or Y? What problems did that decision avoid?

These last questions are tough and often have subtle answers. They take thought and intention. Often while designing a game my mind goes towards complexity. Making things bigger and more detailed! Can’t solve problem A? Well, let’s bolt on solution B!

For example, I’ve wanted to make a game where the player can create or build the world. Why not let the player shape the landscape? Add mountains and rivers? Place buildings? Harvest resources? It would be so cool! Right? But it’s a huge time sink. Even worse, it’s complex and could easily be a huge source of bugs.

So a clever solution? I like how I’m calling myself clever. Hex tiles. Yes. Hex tiles. Let the player build the world, but do it on a grid with prefabs. Bam! Same result. Same mechanic. Much simpler solution. It trades a pile of complex code for time spent in Blender designing tiles. Both Zen World and Dorfromantik are great examples of allowing the player to create the world and doing so without undue complexity.

Navigation can be another tough nut to crack. Issues and bugs pop up all over the place. Units running into each other. Different movement costs. Obstacles. How about navigation in a procedural landscape? Not to mention performance can be an issue with a large number of units.

My “Research” List

Creeper World 4 gets around this in such a simple and elegant way. Have all the units fly in straight lines. Hover. Move. Land. Done.
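To show how little code straight-line movement needs compared to a full navigation system, here is a minimal sketch in Python (not Unity C#). The function name, the 2D tuple positions, and the speed and time-step values are all assumptions made for illustration; this is not how Creeper World 4 is actually implemented.

```python
import math

def step_toward(position, target, speed, dt):
    """Move a unit one time step along a straight line toward its target.

    Hypothetical helper: no obstacles, no pathfinding, no unit collisions.
    """
    px, py = position
    tx, ty = target
    dx, dy = tx - px, ty - py
    distance = math.hypot(dx, dy)
    max_step = speed * dt
    if distance <= max_step:
        # Close enough to arrive this step; snap to the target (time to land).
        return (tx, ty)
    scale = max_step / distance
    return (px + dx * scale, py + dy * scale)

# A unit flying from (0, 0) to (10, 0) at 2 units per second, 1-second steps.
pos = (0.0, 0.0)
steps = 0
while pos != (10.0, 0.0):
    pos = step_toward(pos, (10.0, 0.0), speed=2.0, dt=1.0)
    steps += 1
print(steps)  # 5
```

There is no graph, no movement cost, and no obstacle handling here, which is the whole point: the design decision removes the problem instead of solving it.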

I am a big believer that constraints can foster creativity. For me, identifying what I can’t do is more important than identifying what I can do.

When I was building Fracture the Flag I wanted the players to be able to claim territory. At first, I wanted to break the map up into regions - something like the Risk map. I struggled with it for a while. One dead end after another. I couldn’t figure out a good solution.

Then I asked, why define the regions? Instead, let the players place flags around the map to claim territory! If a flag gets knocked down the player loses that territory. Want to know if a player can build at position X or Y? They can if it’s close to a flag. So many problems solved. So much simpler and frankly so much more fun.
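The "close to a flag" rule can be sketched in a few lines. This is a Python illustration under stated assumptions, not the actual Fracture the Flag code: the function name, the 2D positions, and the claim radius of 5 units are all hypothetical.

```python
import math

# Hypothetical value for illustration; the real game's radius is not stated.
CLAIM_RADIUS = 5.0

def can_build(position, flags, claim_radius=CLAIM_RADIUS):
    """Return True if position lies within claim_radius of any standing flag."""
    px, py = position
    return any(math.hypot(px - fx, py - fy) <= claim_radius for fx, fy in flags)

flags = [(0.0, 0.0), (20.0, 0.0)]
print(can_build((3.0, 4.0), flags))   # True: exactly 5 units from the first flag
print(can_build((10.0, 0.0), flags))  # False: 10 units from both flags

# Knocking a flag down is just removing it; the territory goes with it.
flags.remove((0.0, 0.0))
print(can_build((3.0, 4.0), flags))   # False: the nearby flag is gone
```

No region boundaries are stored anywhere. Territory is derived from the flag positions each time the question is asked, so losing a flag needs no extra bookkeeping.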

With research comes a flood of ideas. And it’s crucial to write them down. Grab a notebook. Open a Google Doc. Or, as I recently discovered, use Google Keep - it’s super lightweight and easy to access on mobile for those ah-ha moments.

I keep track of big picture game ideas as well as smaller mechanics that I find interesting. I don’t limit myself to one idea or things that might nicely fit together. This is the throwing spaghetti at the wall stage of design. I’m throwing it out there and seeing what sticks. Even if, maybe especially if, I get excited about one idea I force myself to think beyond it and come up with multiple concepts and ideas. This is not the time to hyper focus.

At this stage, I also have to bring in a dose of reality. I’m not making an MMO or the next E-Sports title. I’m dreaming big, but also trying not to waste my time with completely unrealistic dreams. I should probably know how to make at least 70, 80 or maybe 90 percent of the game!

While you’re playing games as “research”, support small developers and leave them reviews! Use those reviews to process what you like and what you don’t like. What would you change? What would you keep? What feels good? What would feel better? Those reviews are so crucial to a developer. Yes, even negative ones are helpful.

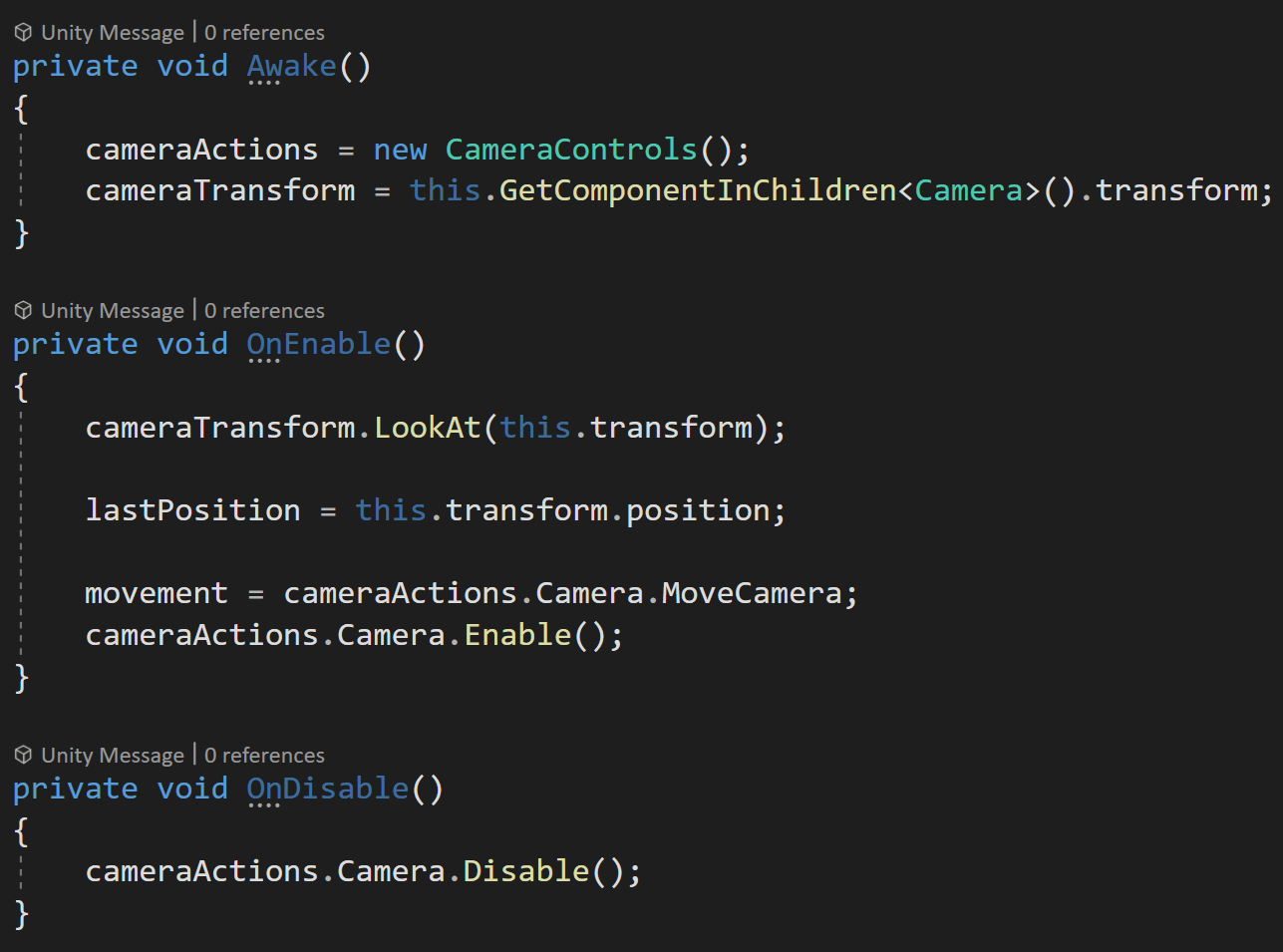

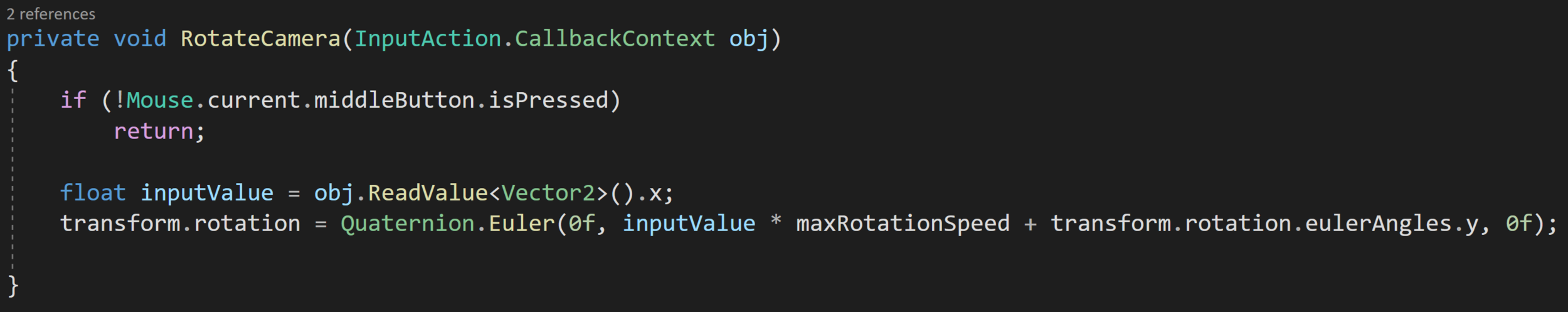

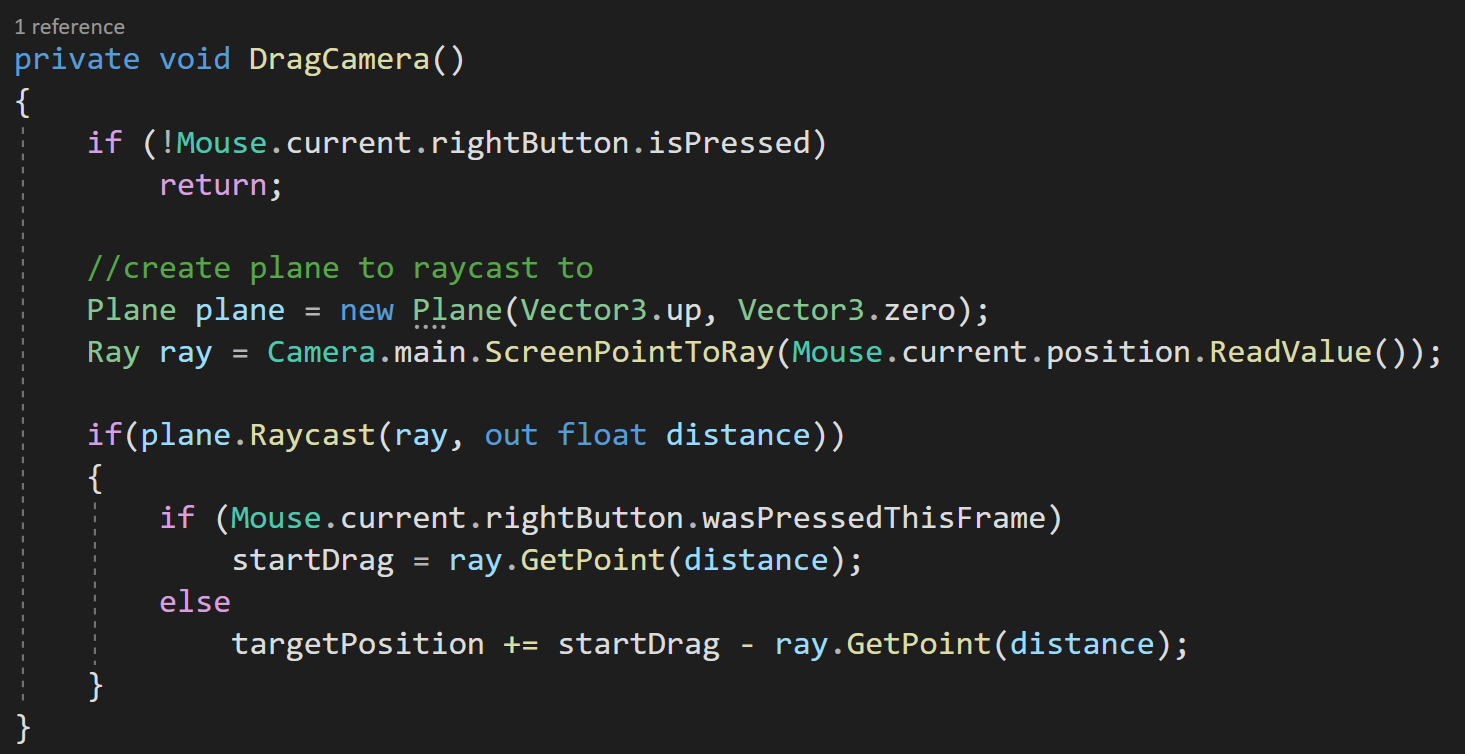

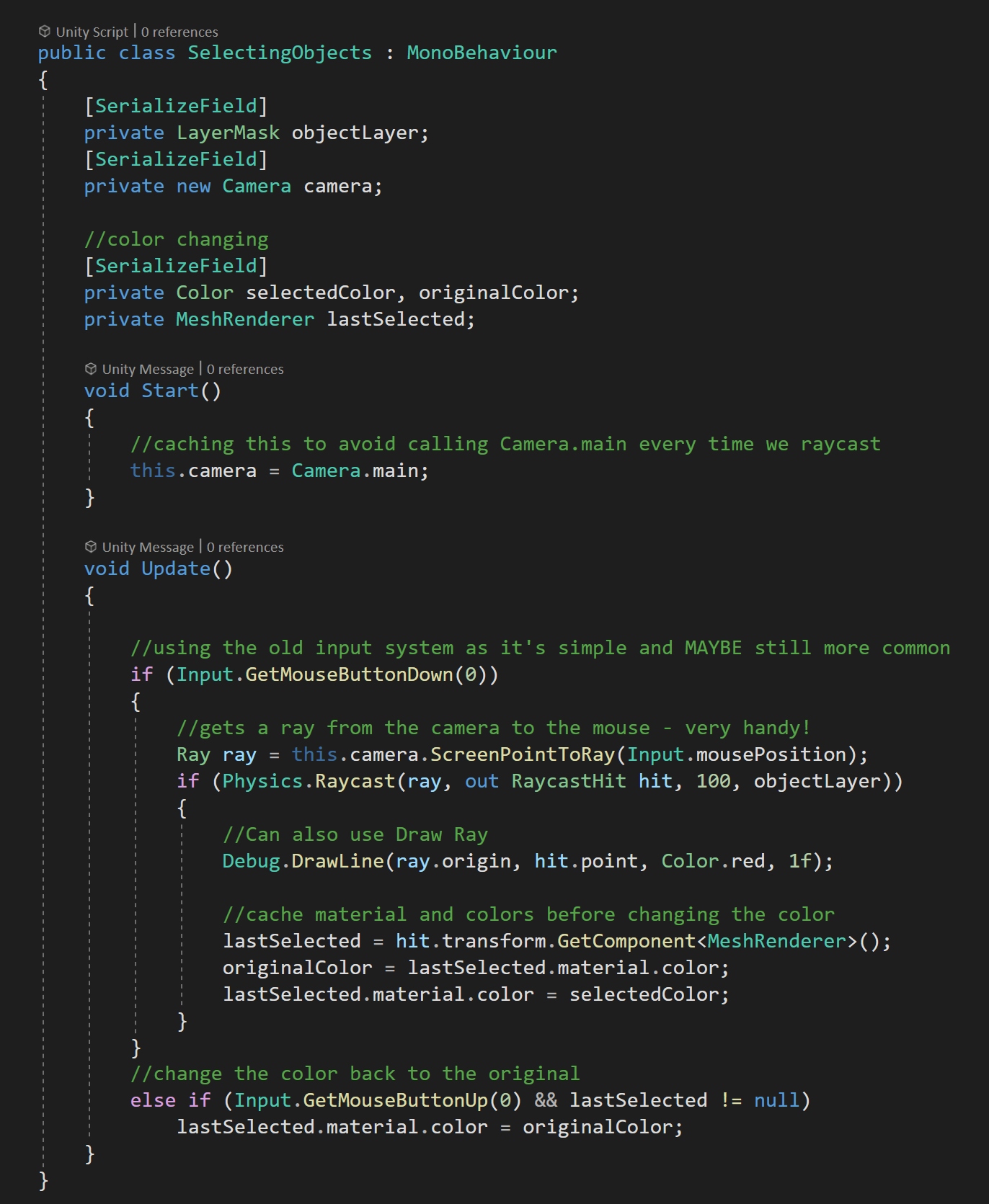

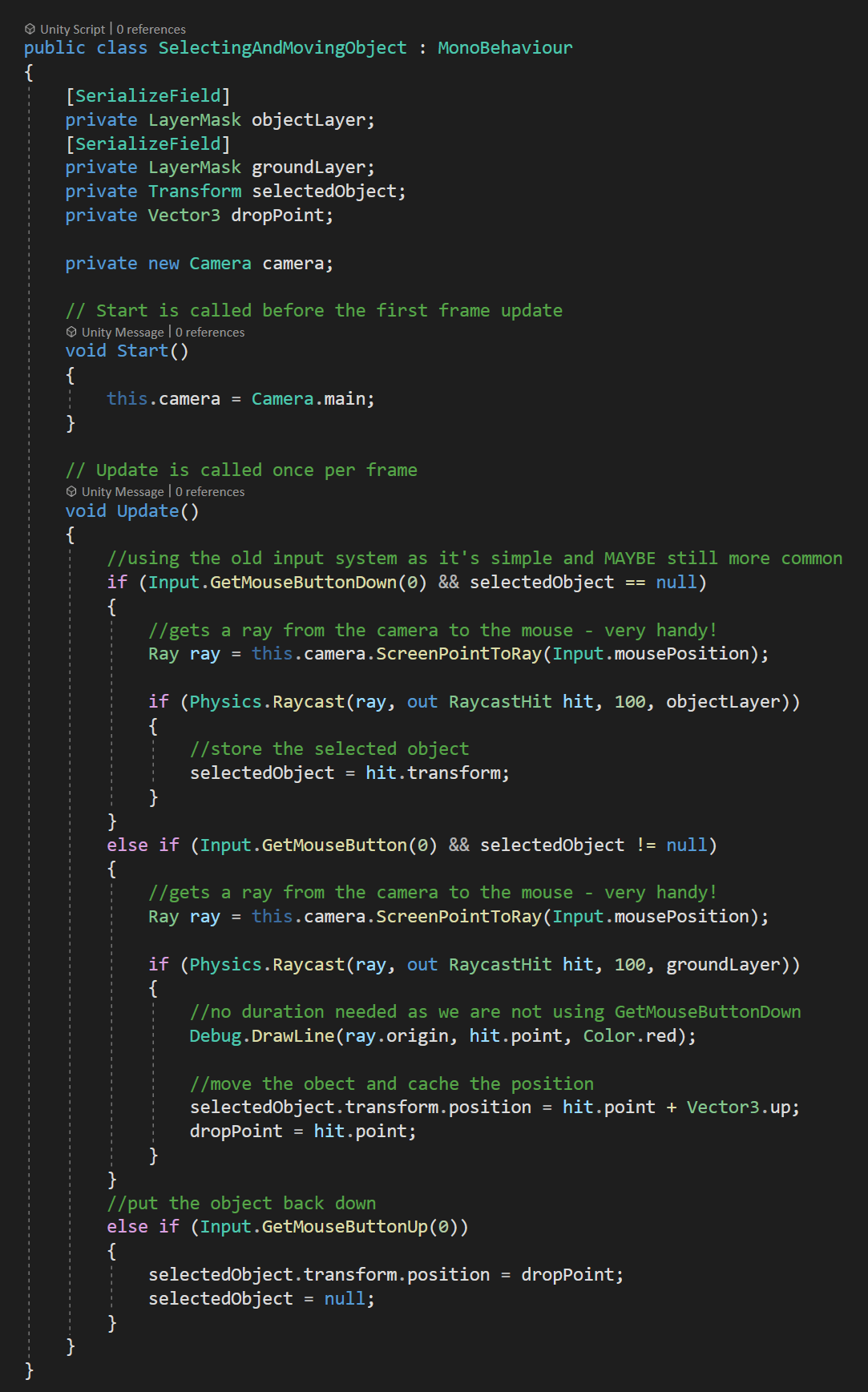

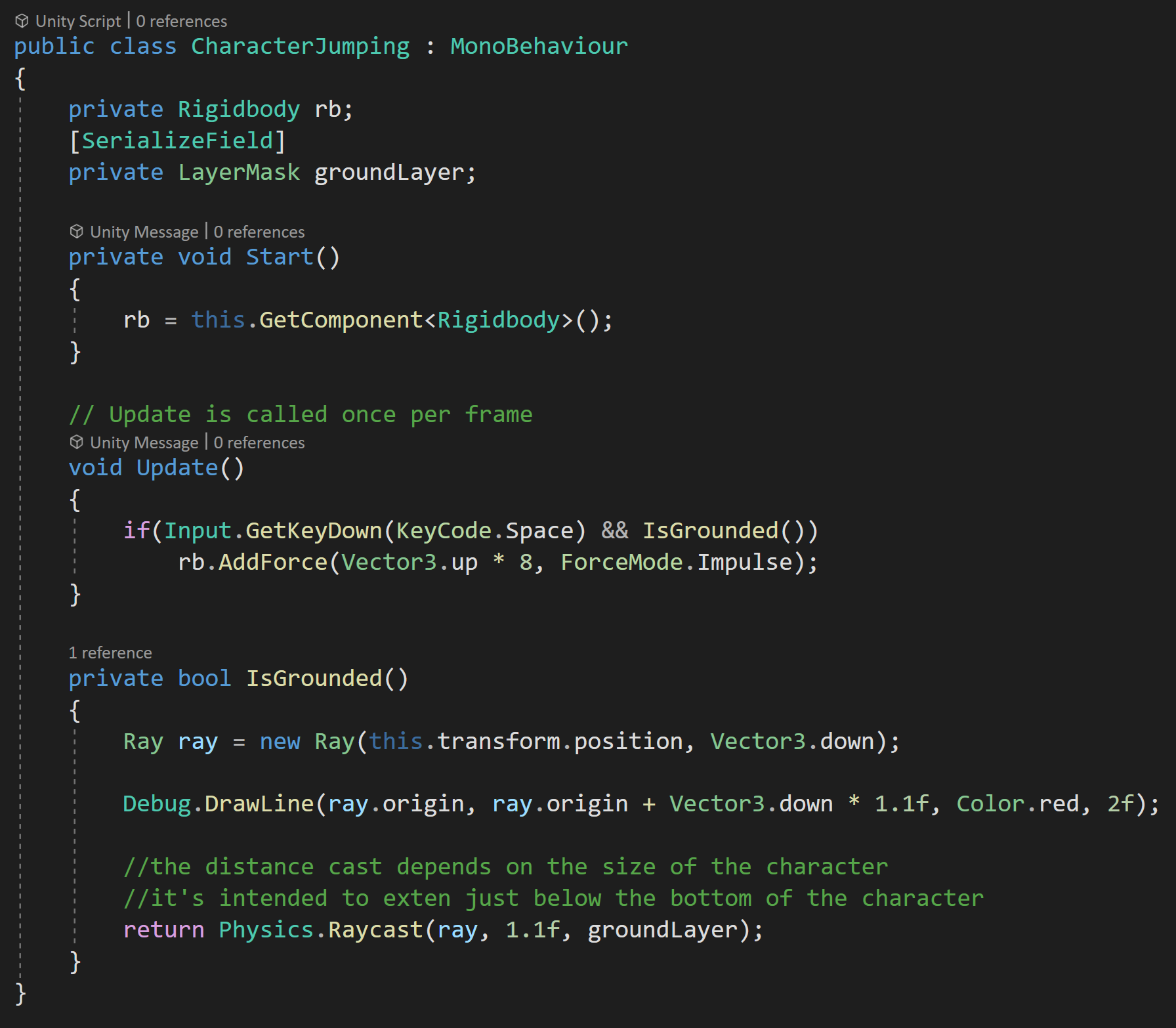

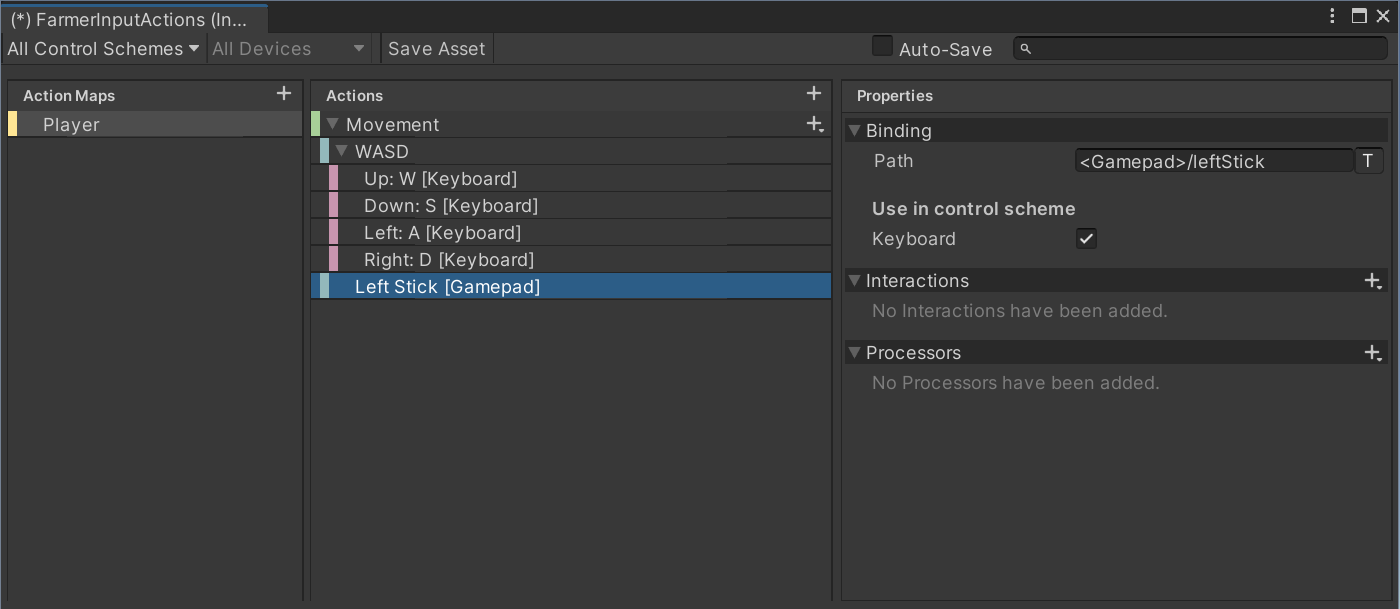

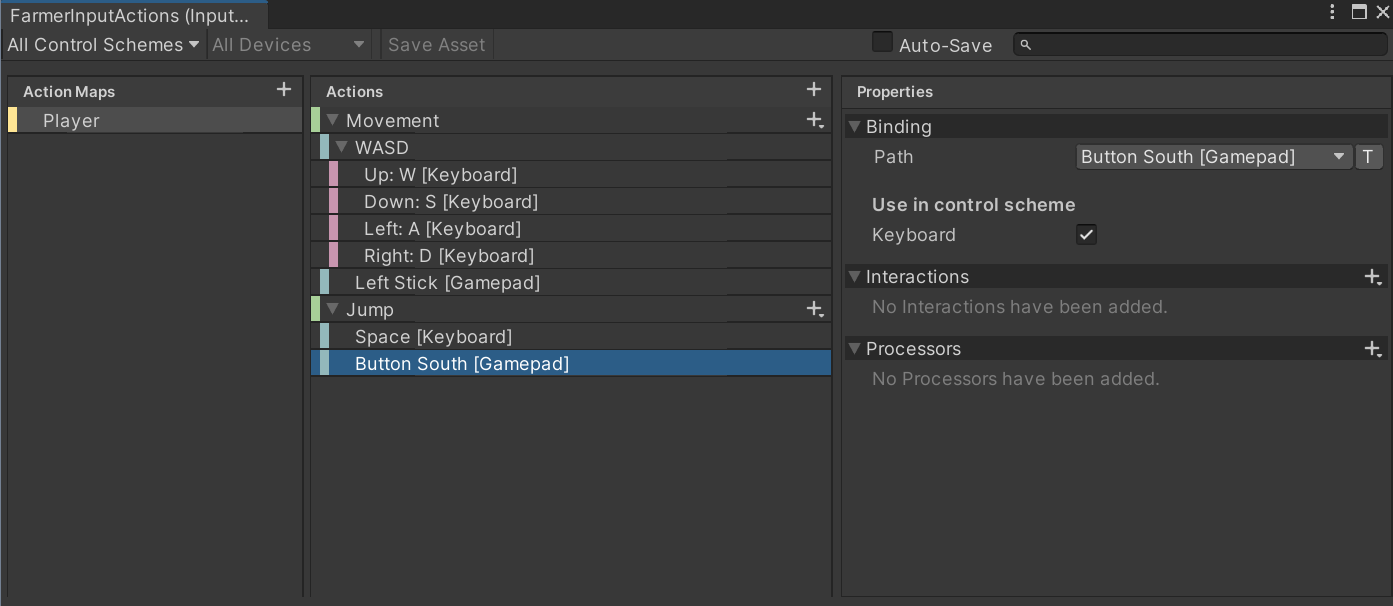

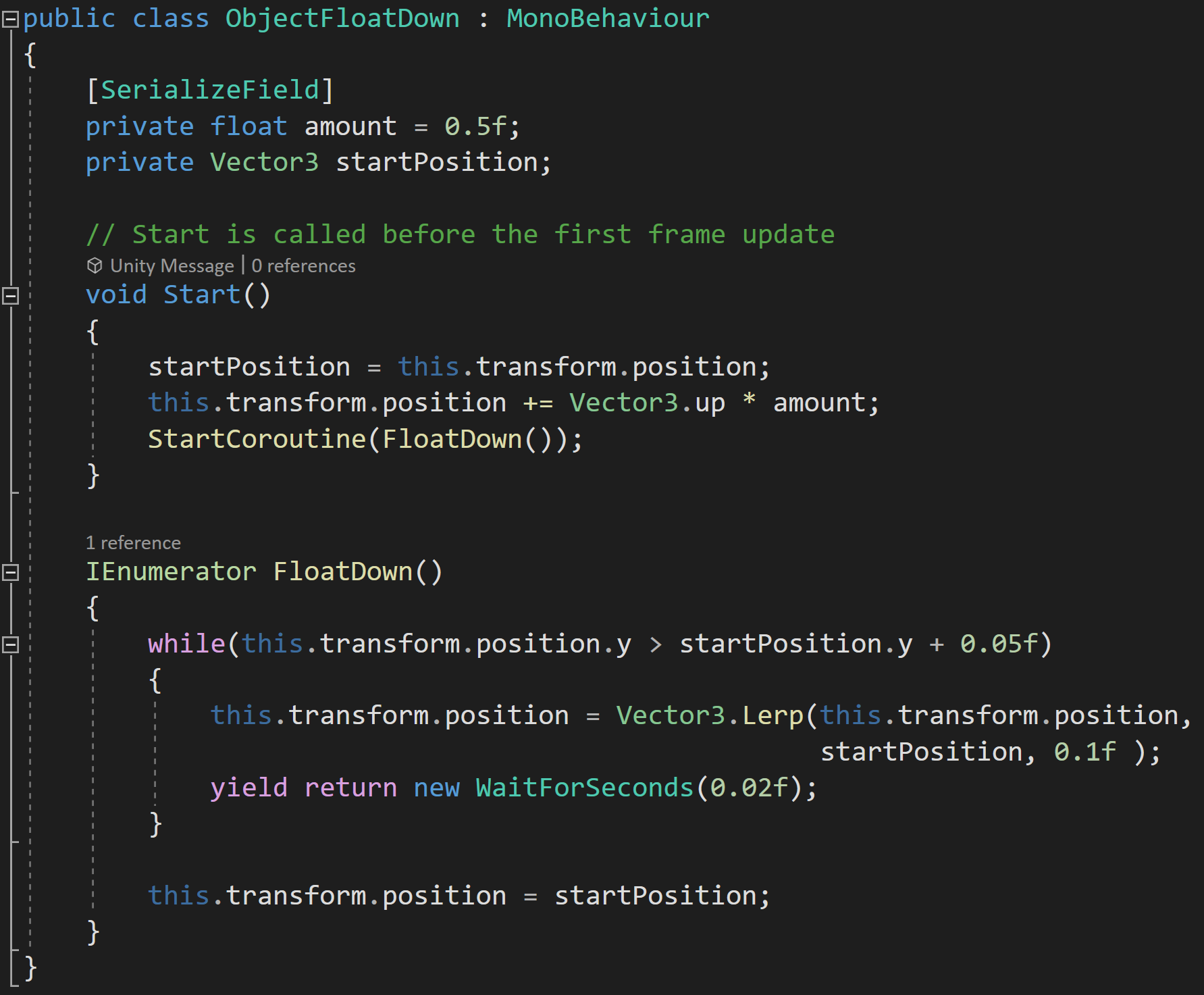

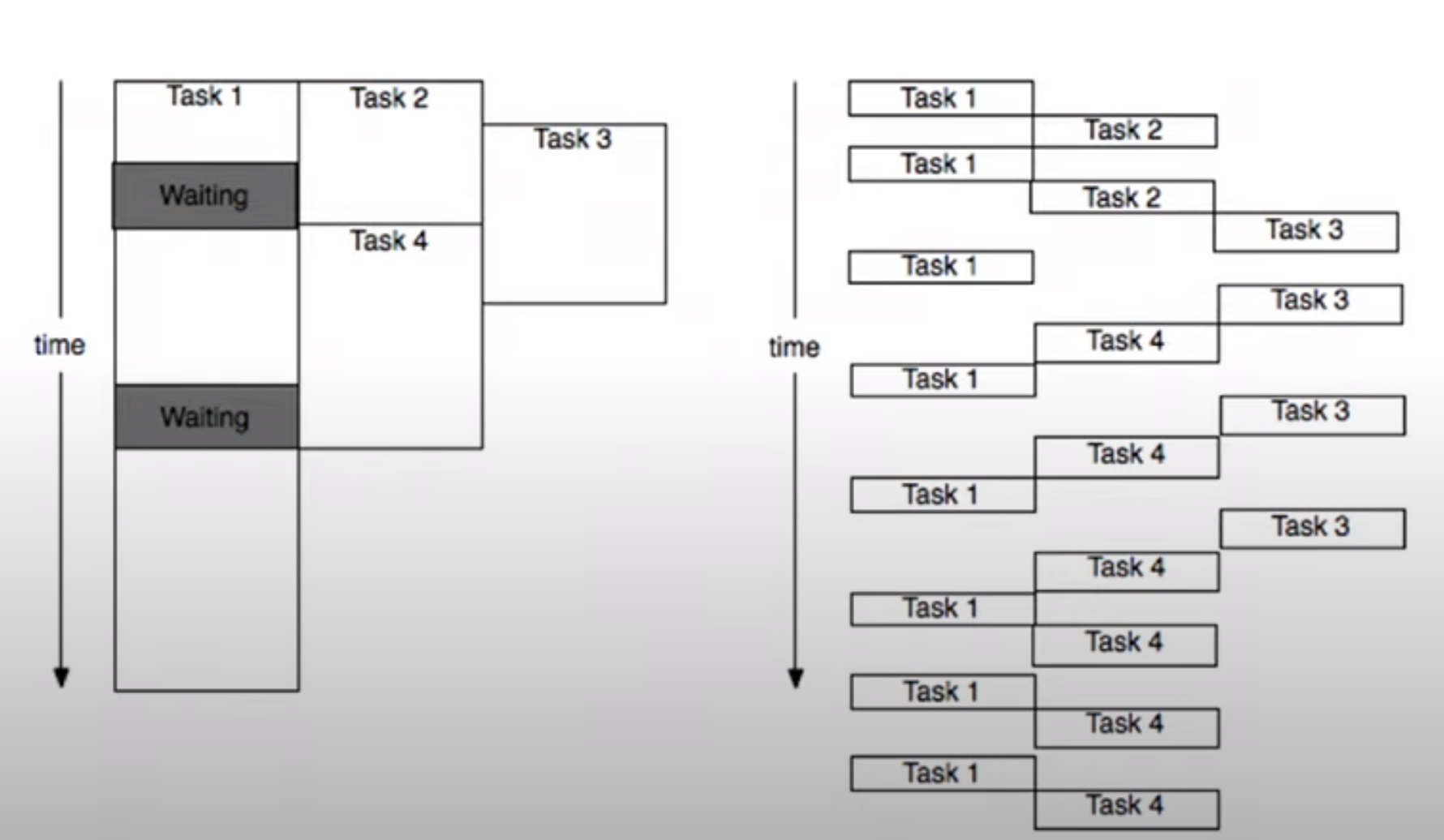

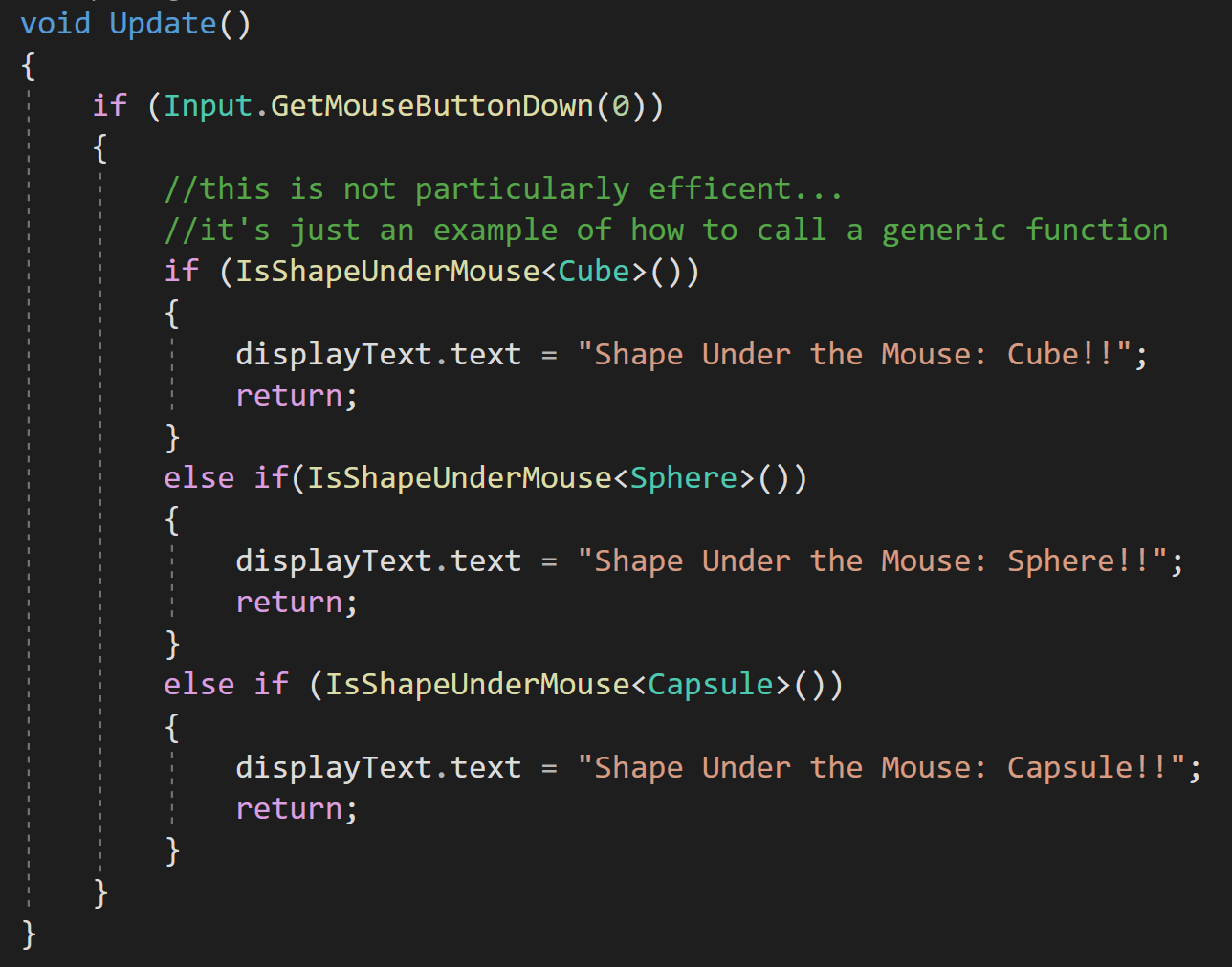

Prototype Systems - Not The Game

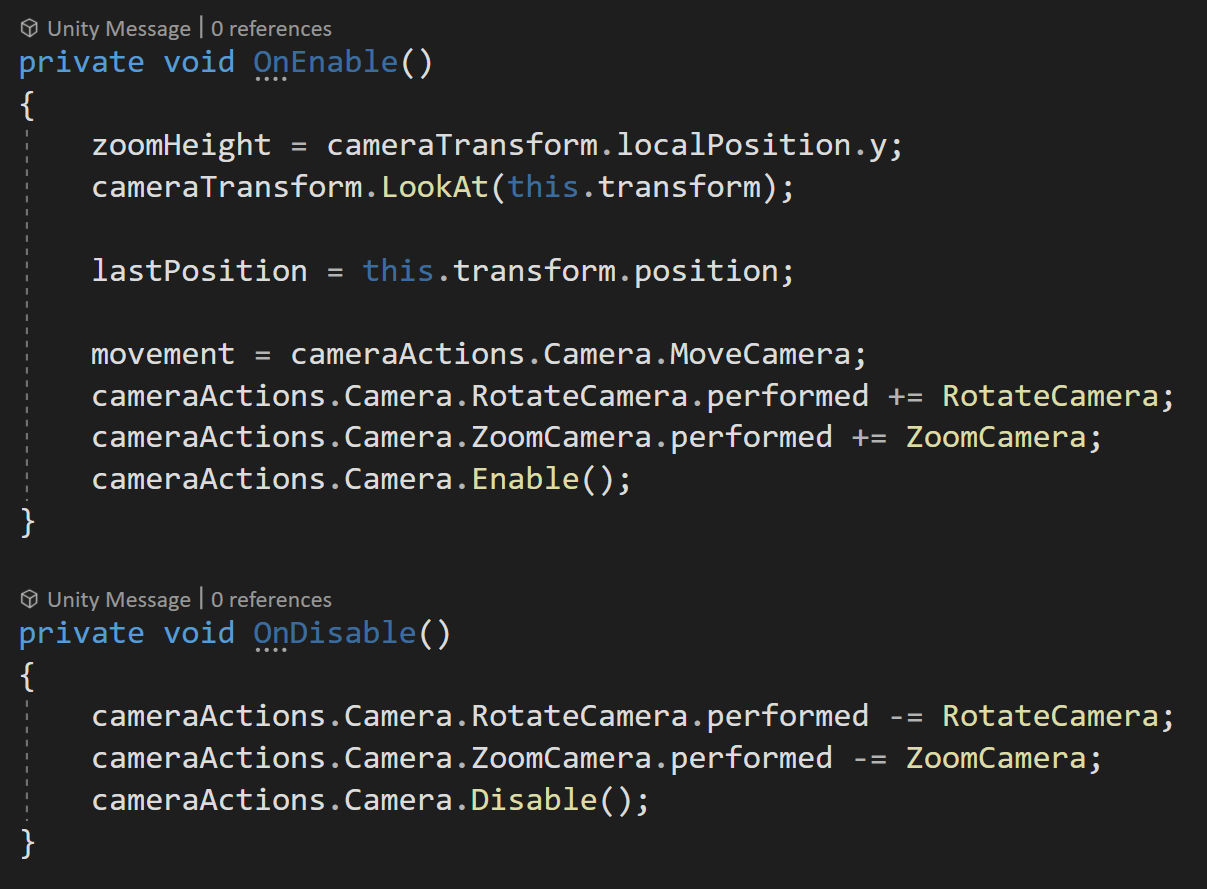

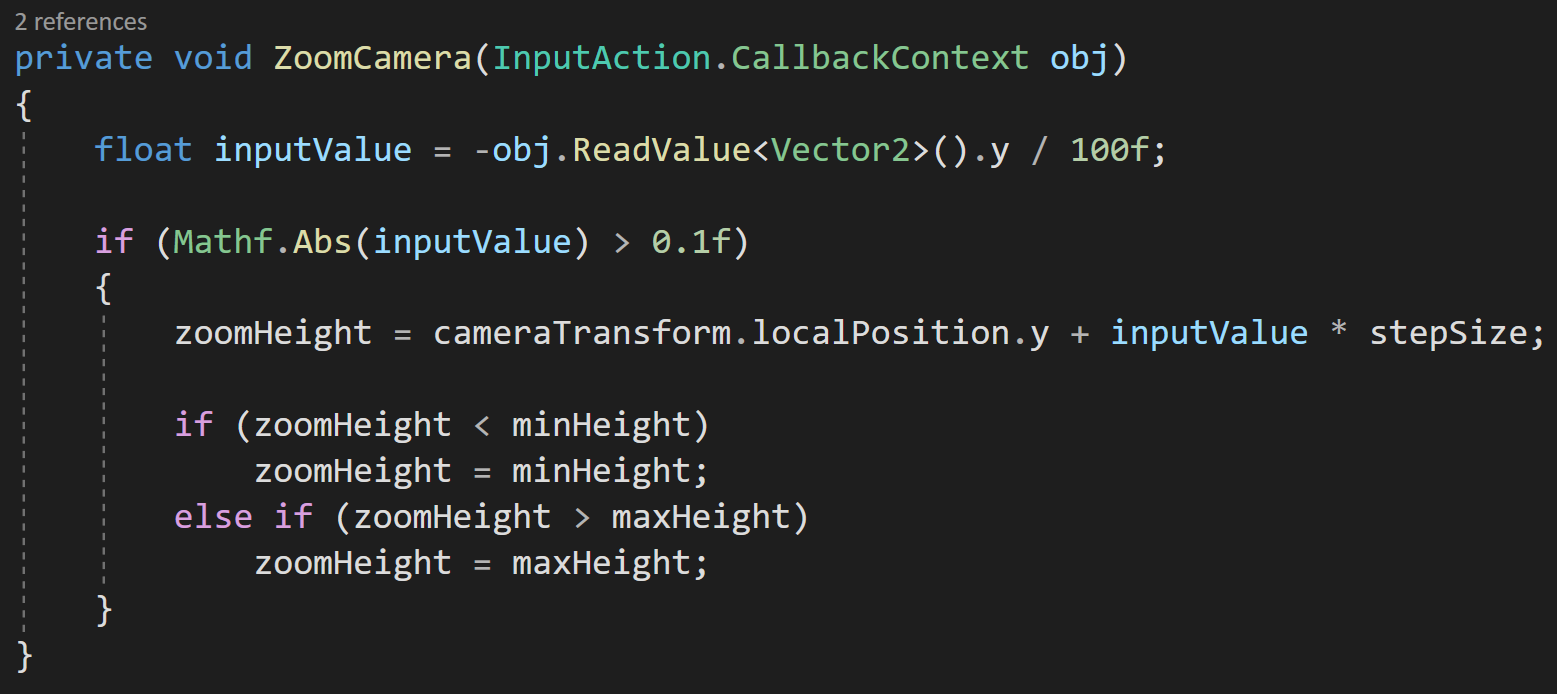

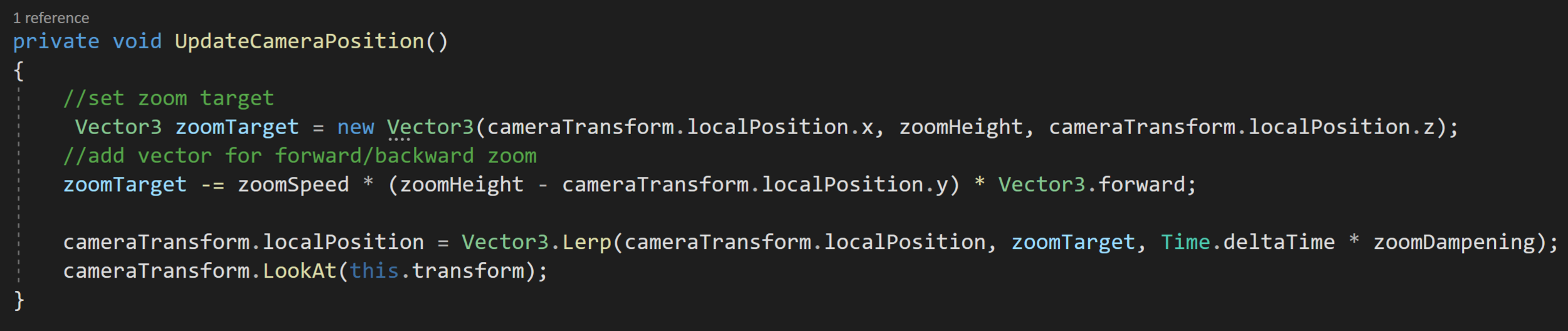

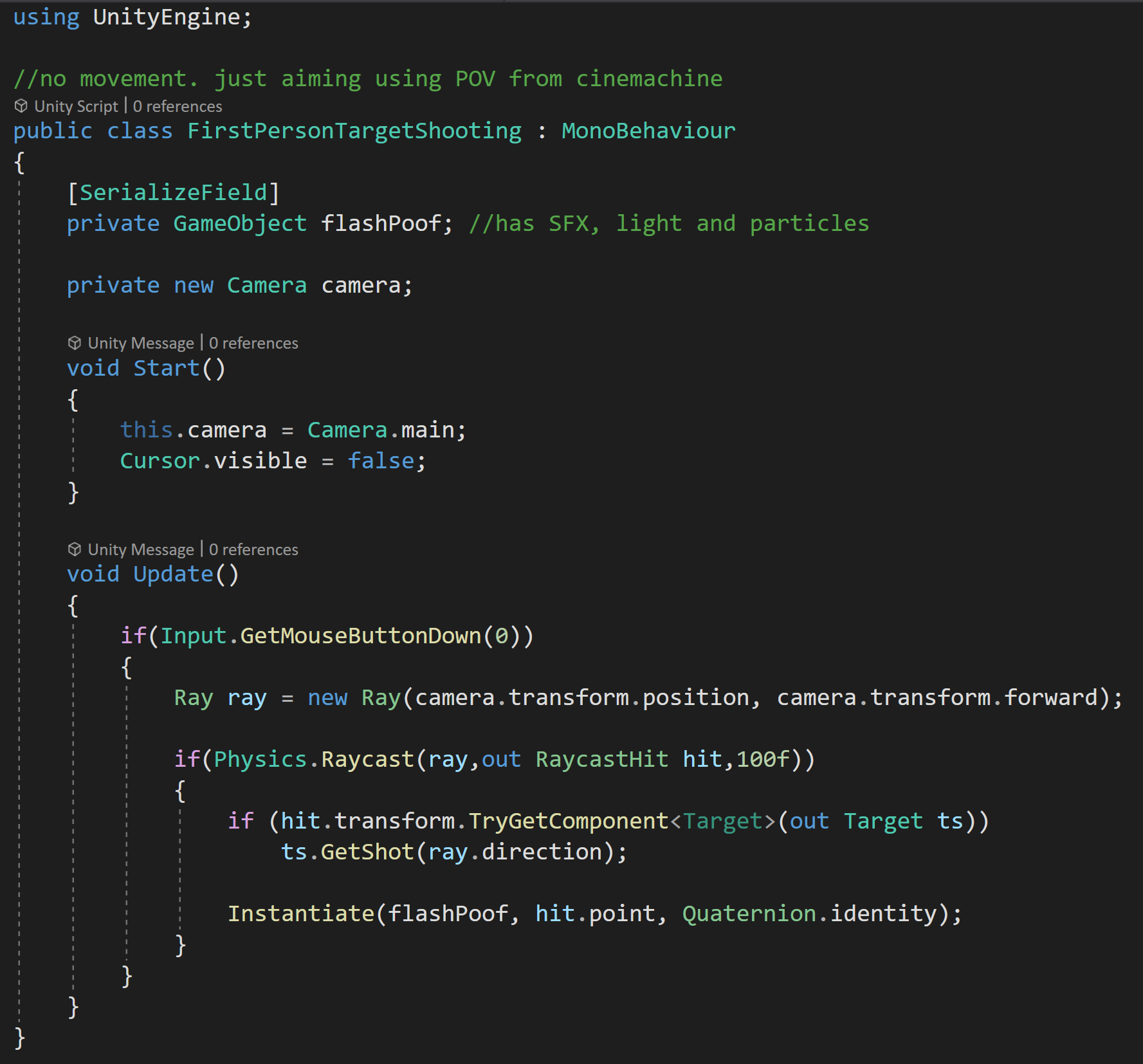

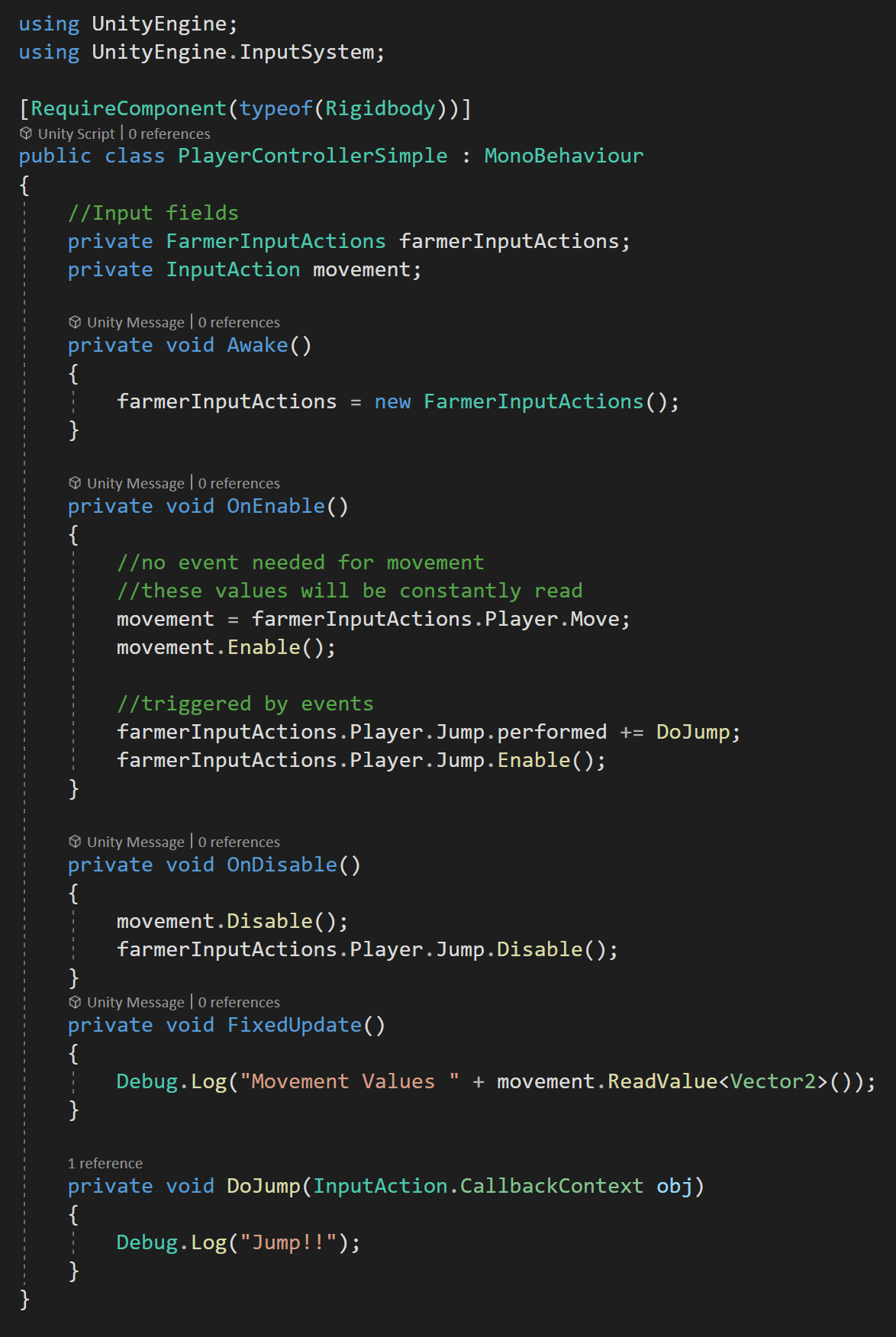

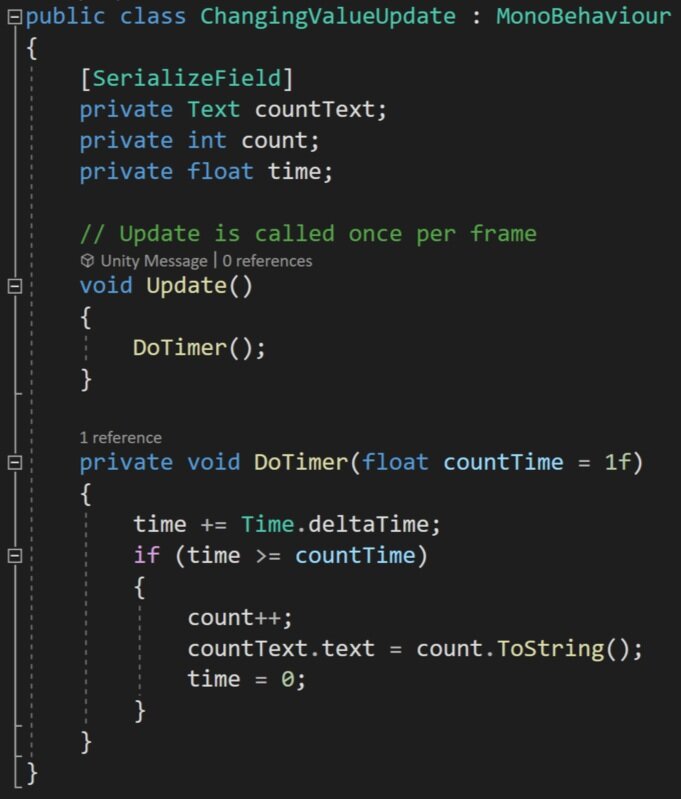

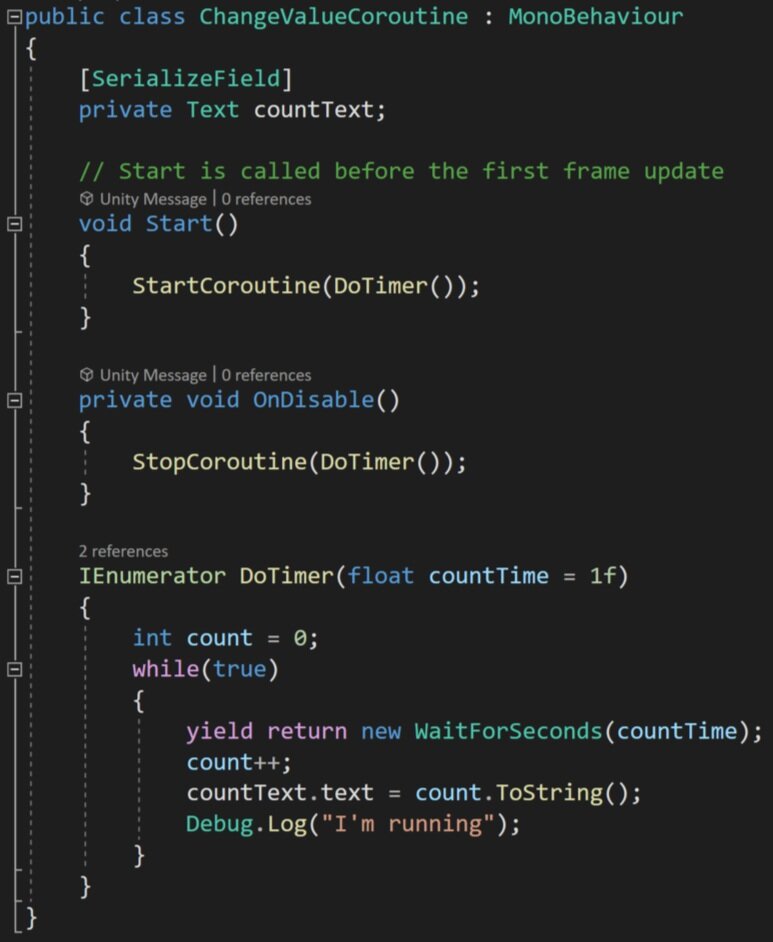

At this point in the process, I get to start scratching the itch to build. Up until now, Unity hasn’t been opened. I’ve had to fight the urge, but it’s been for the best. Until now.

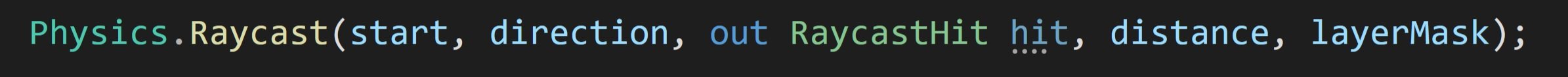

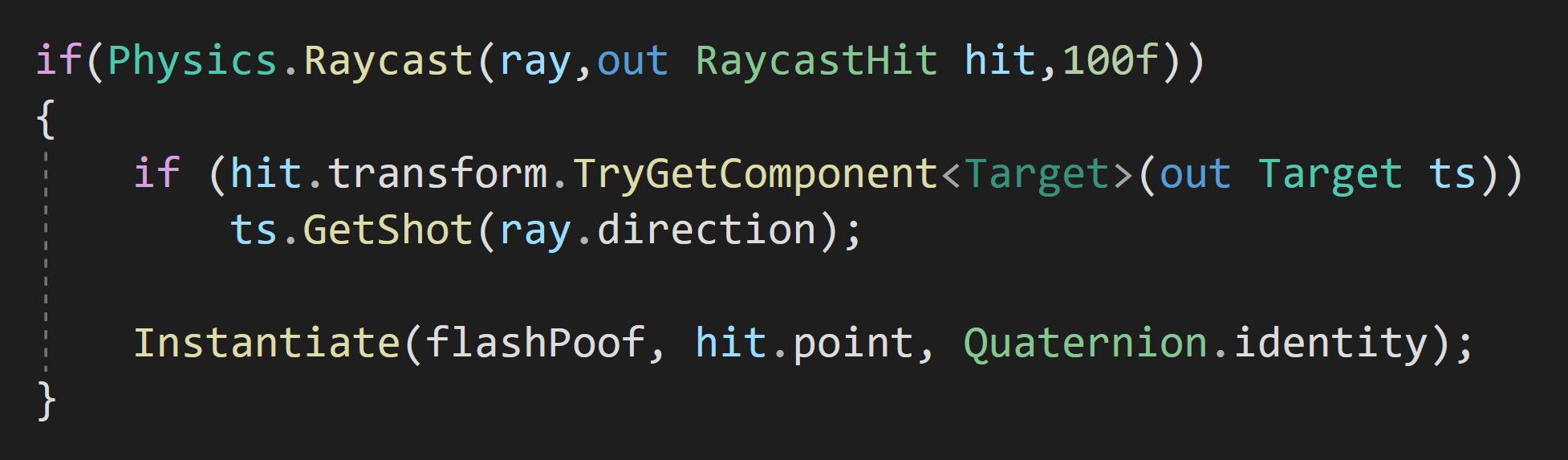

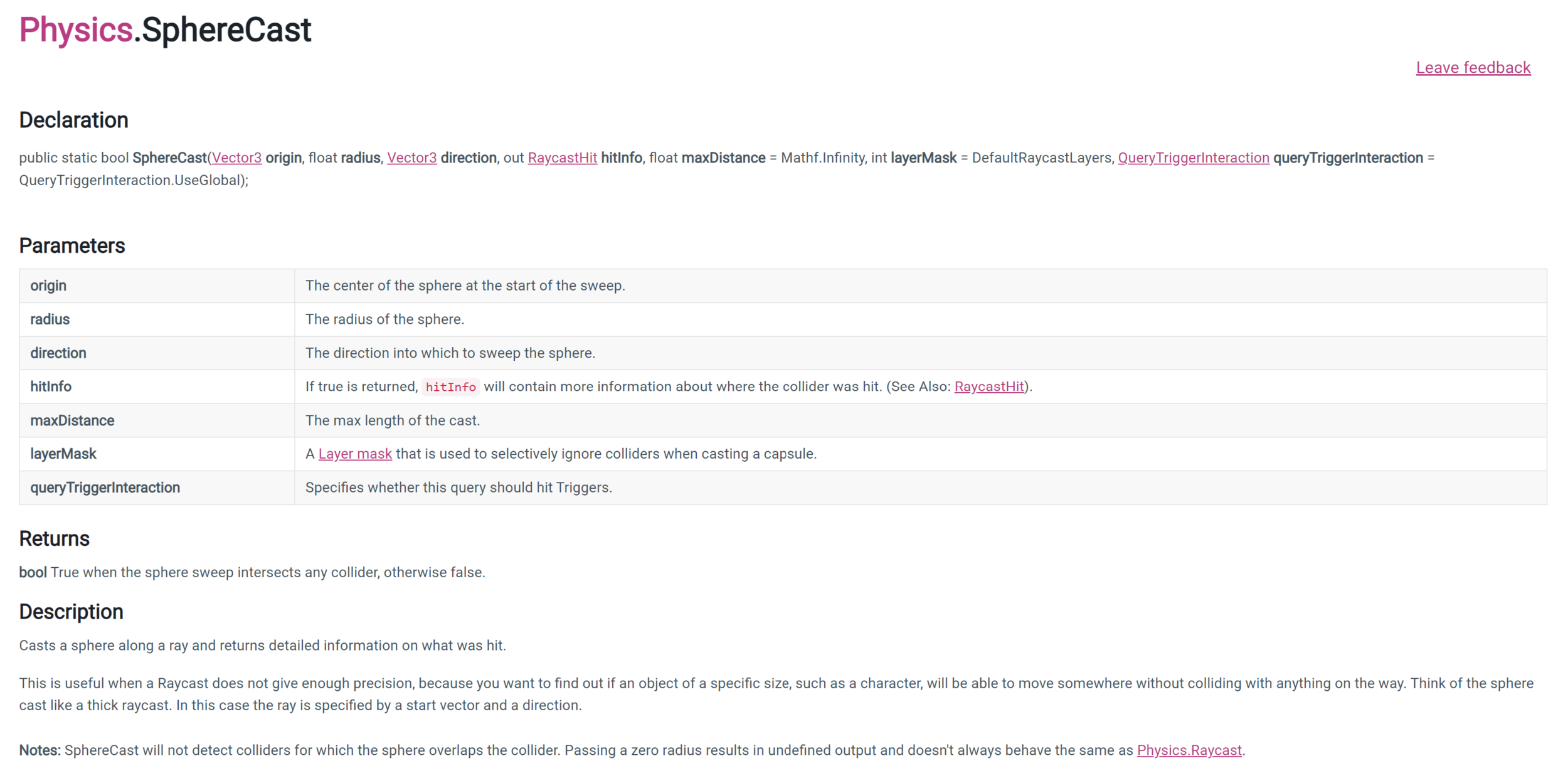

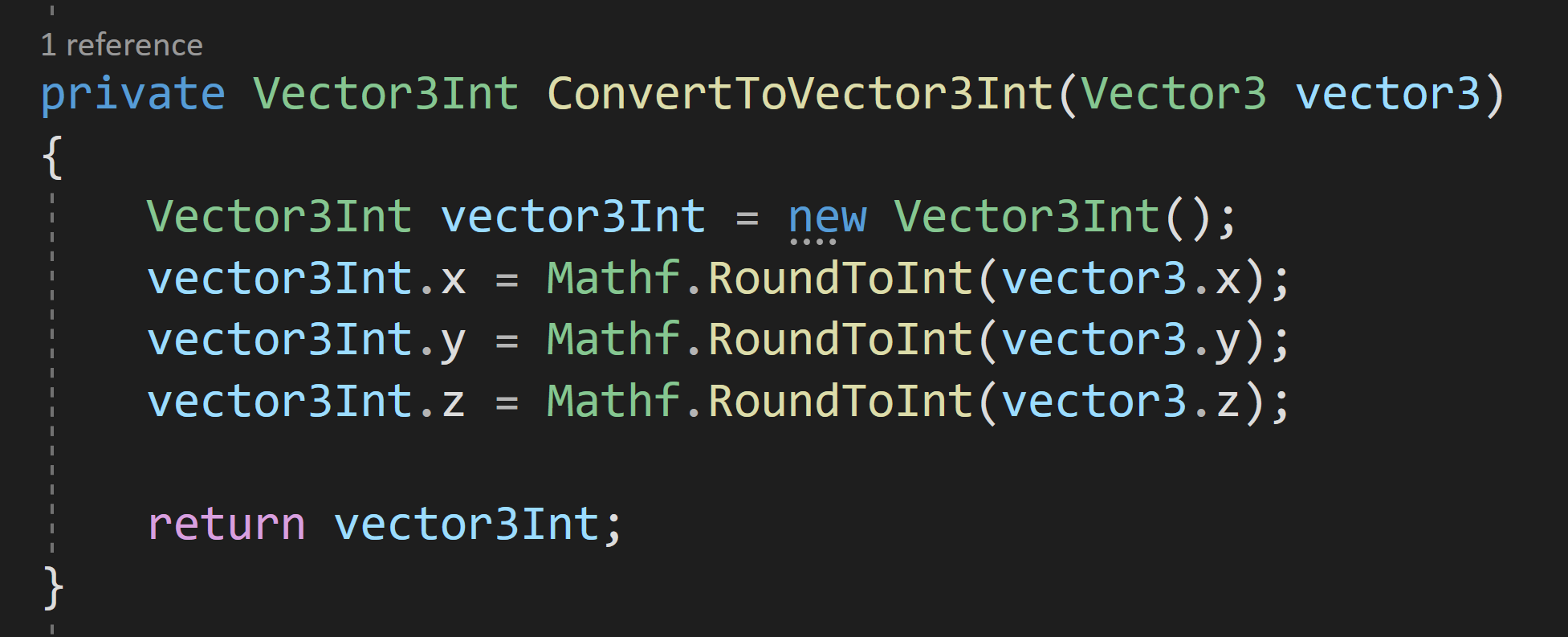

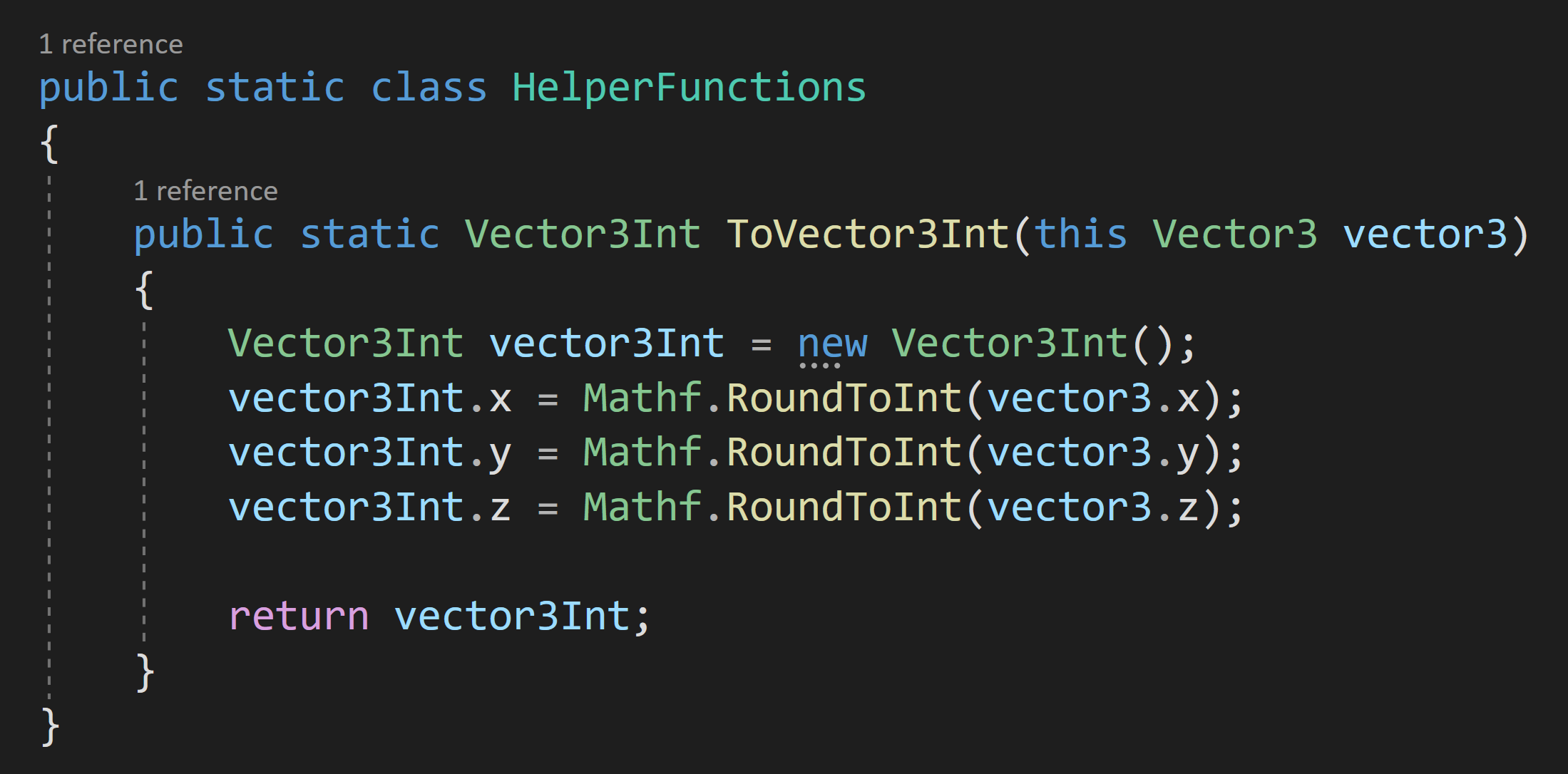

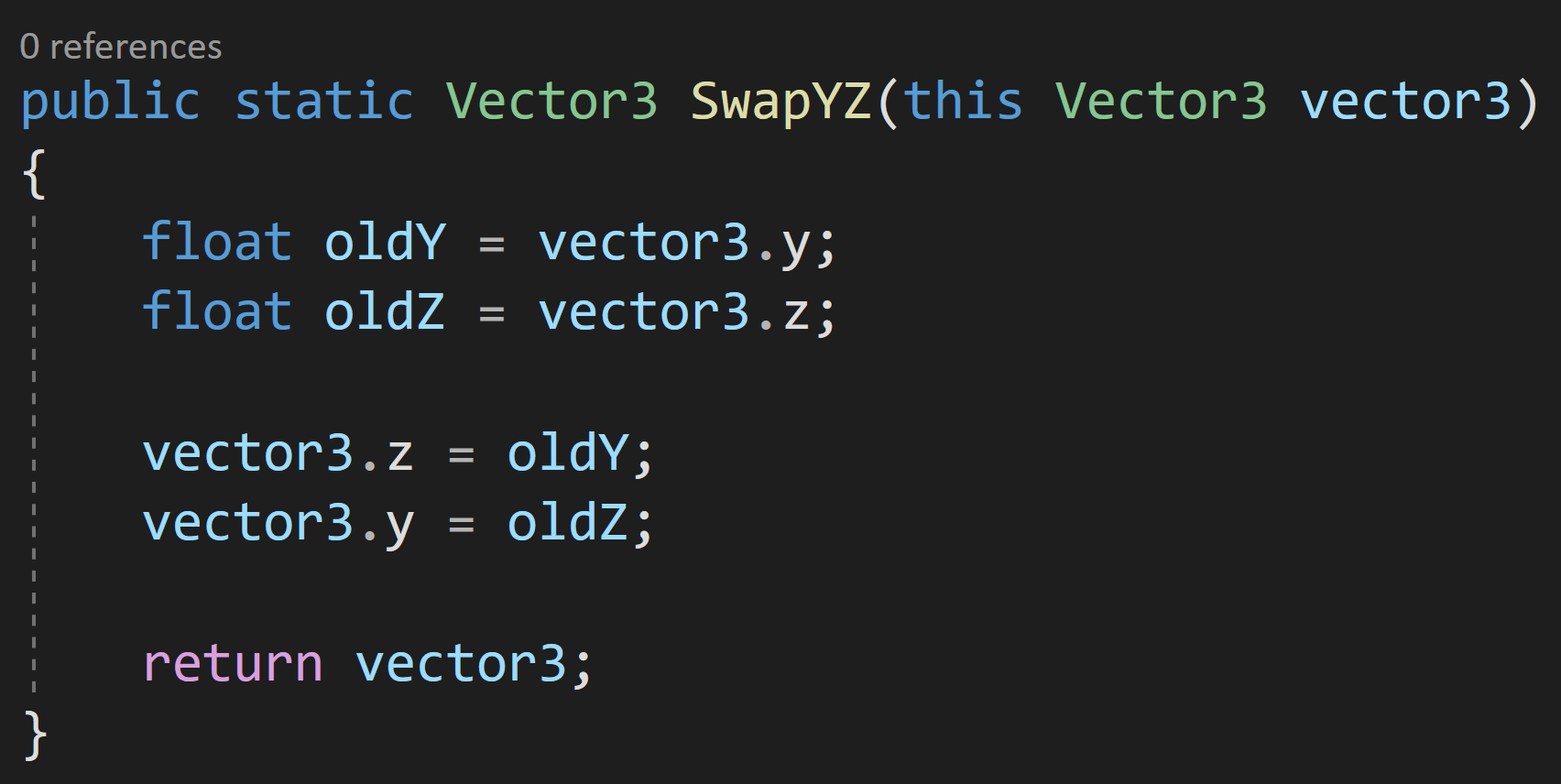

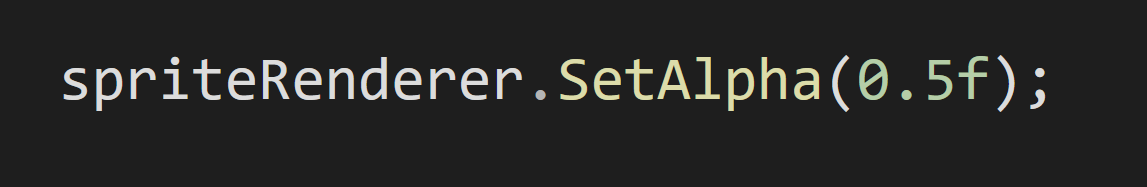

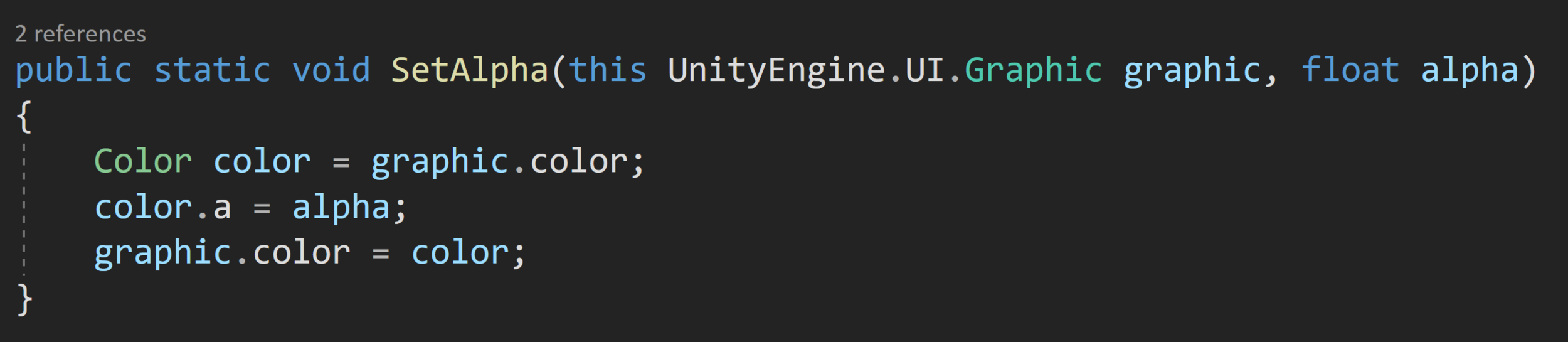

Now I get to prototype systems. Not a game or the game. Just parts of a potential game. This is when I start to explore systems that I haven’t made before or systems I don’t know how to make. I focus on parts that seem tricky or will be core to the game. I want to figure out the viability of an idea or concept.

At this stage, I dive into different research. Not playing games, but watching and reading tutorials and articles. I take notes. Lots of notes. For me, this is like going back to school. I need to learn how other people have created systems or mechanics. Why re-invent the wheel? Sometimes you need to roll your own solution, but why not at least see how other folks have done it first?

If I find a tutorial that feels too complex, I look for another. If that still feels wrong, I start to question the mechanic itself.

Maybe it’s beyond my skill set? Maybe it’s too complex for a guy doing this in his spare time? Or maybe I just need to slow down and read more carefully?

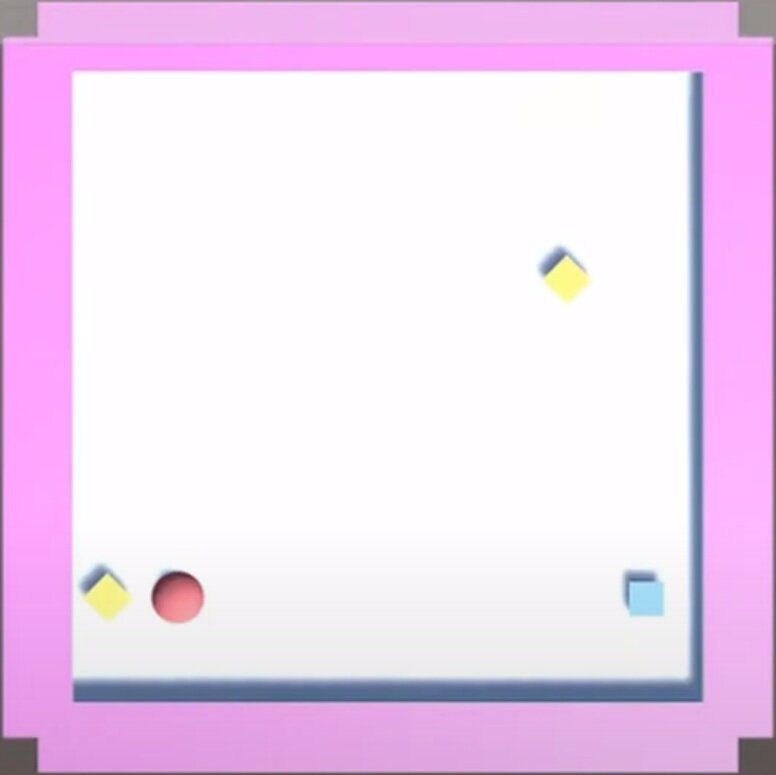

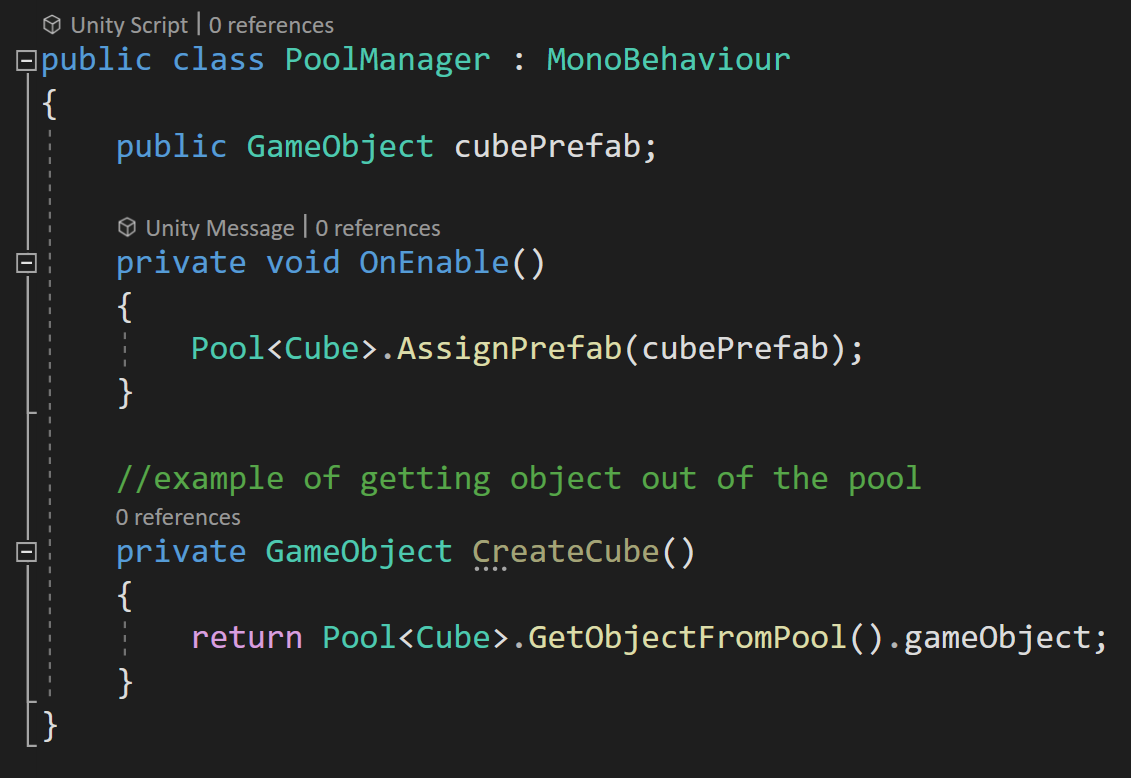

Some prototype Art for a possible Hex tile Game

Understanding and implementing a hex tile system was very much all of the above. Red Blob Games has an excellent guide to hex grids with all the math and examples of code to implement hex grids into your games. It’s not easy. Not even close. But it was fun to learn and with a healthy dose of effort, it’s understandable. (To help cement my understanding, I may do a series of videos on hex grids.)
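For a taste of what the hex math looks like, here is a minimal sketch using axial (q, r) coordinates, the representation the Red Blob Games guide covers. It is written in Python rather than Unity C#, the function names are my own, and the pointy-top orientation and tile size are assumptions; treat it as a starting point, not a full hex grid system.

```python
import math

# The six neighbor offsets in axial (q, r) coordinates.
AXIAL_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]

def hex_neighbors(q, r):
    """Return the six tiles adjacent to (q, r)."""
    return [(q + dq, r + dr) for dq, dr in AXIAL_DIRECTIONS]

def hex_distance(a, b):
    """Number of tile steps between two axial coordinates."""
    dq = a[0] - b[0]
    dr = a[1] - b[1]
    return (abs(dq) + abs(dq + dr) + abs(dr)) // 2

def hex_to_world(q, r, size):
    """Center of a pointy-top hex in 2D world space (size = center to corner)."""
    x = size * math.sqrt(3) * (q + r / 2)
    y = size * 1.5 * r
    return (x, y)

print(hex_neighbors(0, 0))            # the six tiles around the origin
print(hex_distance((0, 0), (2, -1)))  # 2
print(hex_to_world(0, 1, size=1.0))   # one row over: shifted half a tile in x
```

Placing a prefab on the grid then comes down to converting the tile's axial coordinate to a world position, which is what makes the "build the world from tiles" approach so much simpler than free-form terrain editing.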

This stage is also a chance to evaluate systems to see if they could be the basis of a game. I’ve been intrigued by ecosystems and evolution for a long while. Equilinox is a great example of a fairly recent ecosystem-based game made by a single (skilled) individual. Sebastian Lague put together an interesting video on evolution, which was inspired by the Primer videos. All of these made me want to explore the underlying mechanics.

So, I spent a day or two writing code, testing mechanics, and had some fun but ultimately decided it was too fiddly and too hard to base a game on. So I moved on, but it wasn’t a waste of time!

After each prototype is functional, but not polished, I ask myself more questions.

Does the system work? Is the system janky? What parts are missing or still need to be created? Is it too complex or hard to balance? Is there too much content to create? Or maybe it’s just crap?

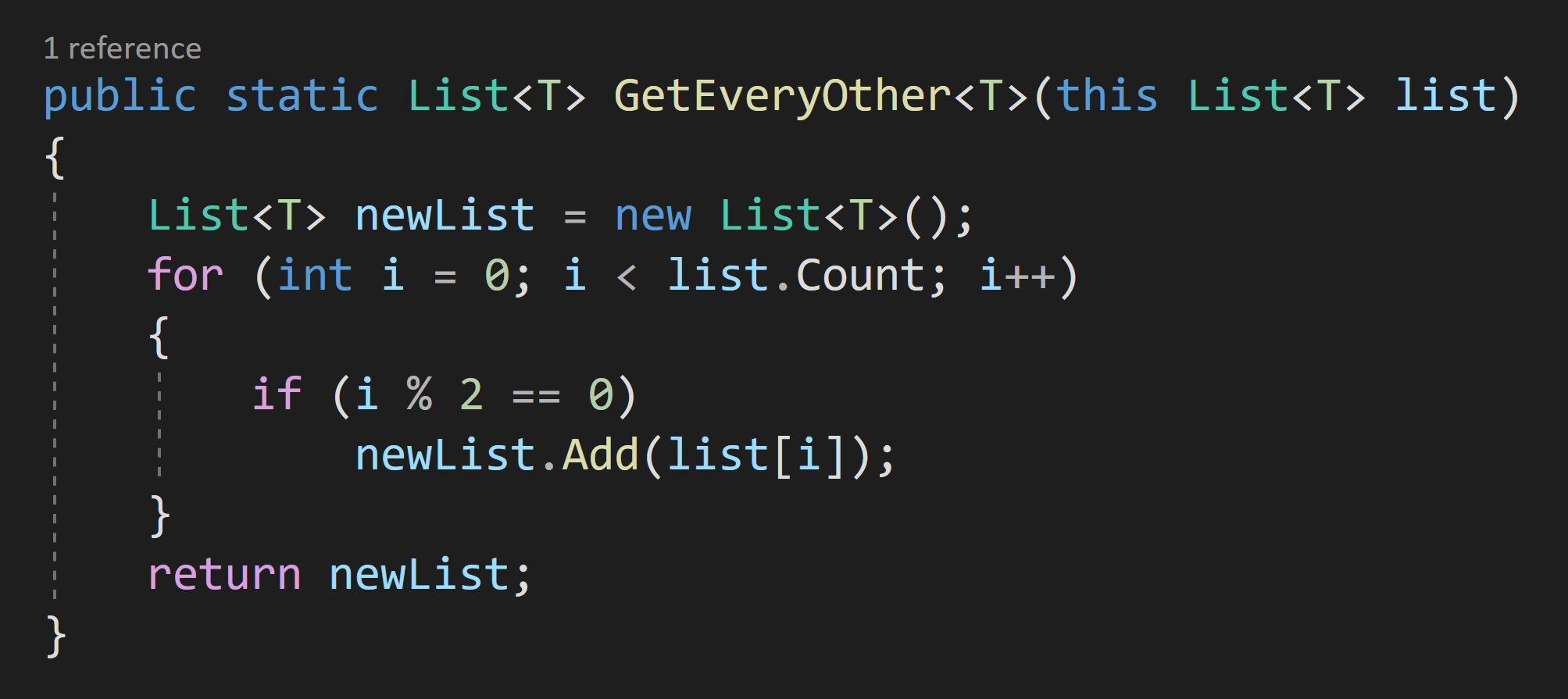

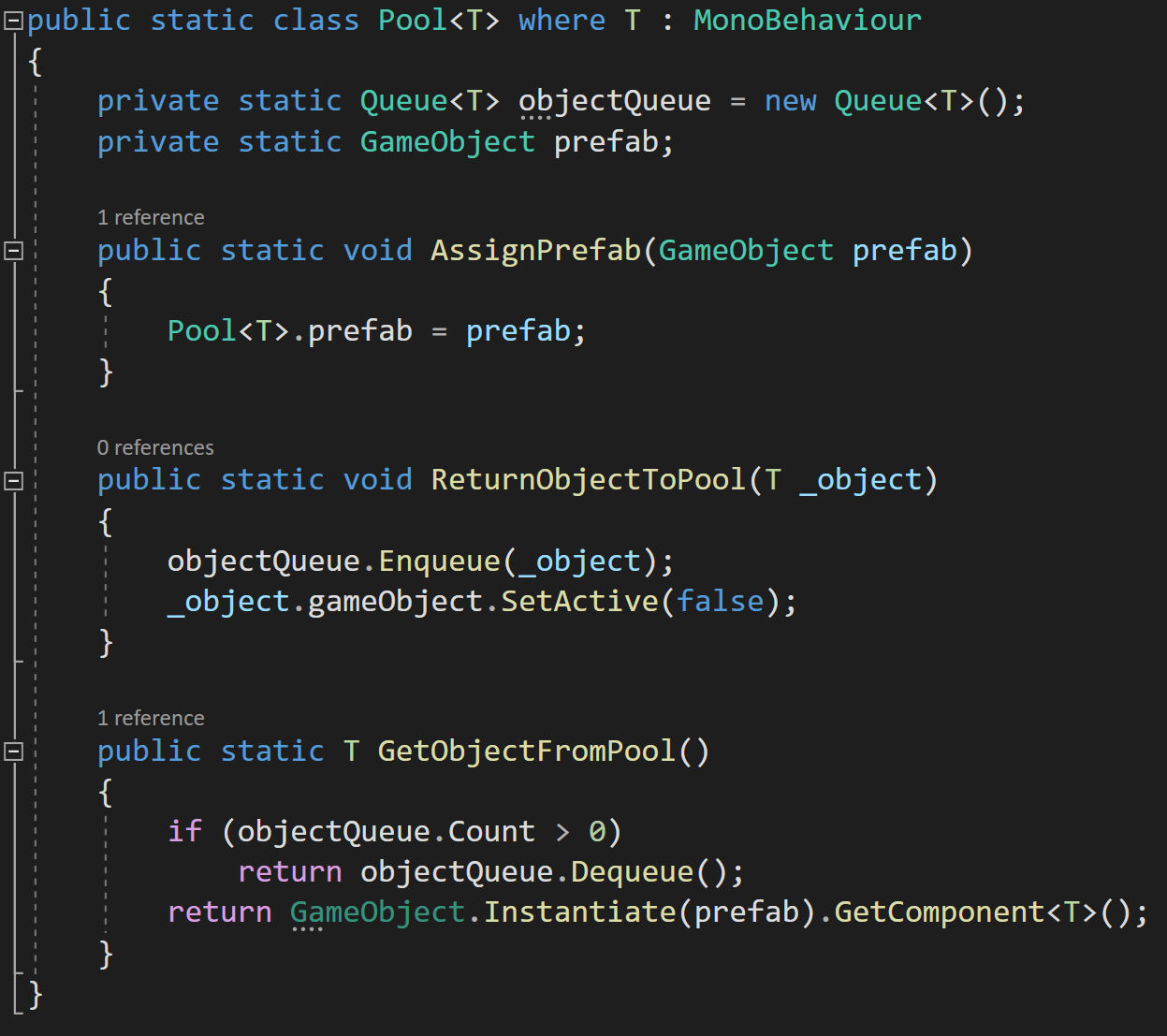

For me, it’s also important that I’m not trying to integrate different system prototypes (at this point). Not yet. I for sure want to avoid coupling and keep things encapsulated, but I also don’t want to go down a giant rabbit hole. That time may come, but it’s not now. I’m also not trying to polish the prototypes. I want the systems to work and be reasonably robust, but at this point, I don’t even know if the systems will be in a game so I don’t want to waste time.

(Pre-Planning) Let The Culling Begin!

With prototypes of systems built, it’s now time to start chopping out the fluff, the junk, and start to give some shape to a game design. And yes, I start asking more questions.

What are the major systems of the game? What systems are easy or hard to make? Are there still systems I don’t know how to make? What do I still need to learn? What will be the singular core mechanic of the game?

And here’s a crucial question!

What are the time sinks? Even if I know how to do X or Y, will it take too long?

3D Models, UI, art, animations, quests, stories, multiplayer, AI…. Basically, everything is a time sink. But!

Which ones play to my strengths? Which ones help me reach my goal? Which ones can I design around or ignore completely? What time sinks can be tossed out and still have a fun game?

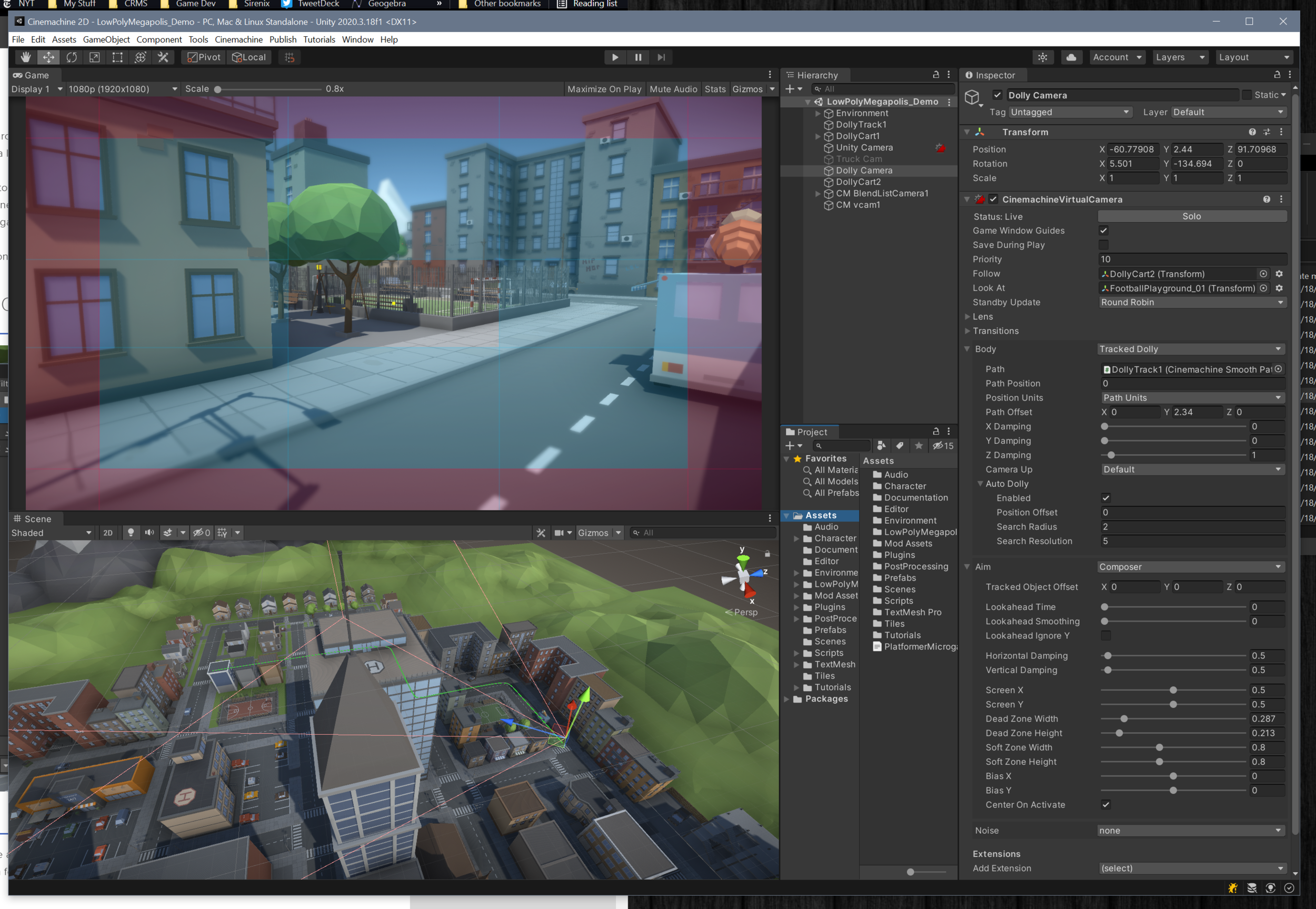

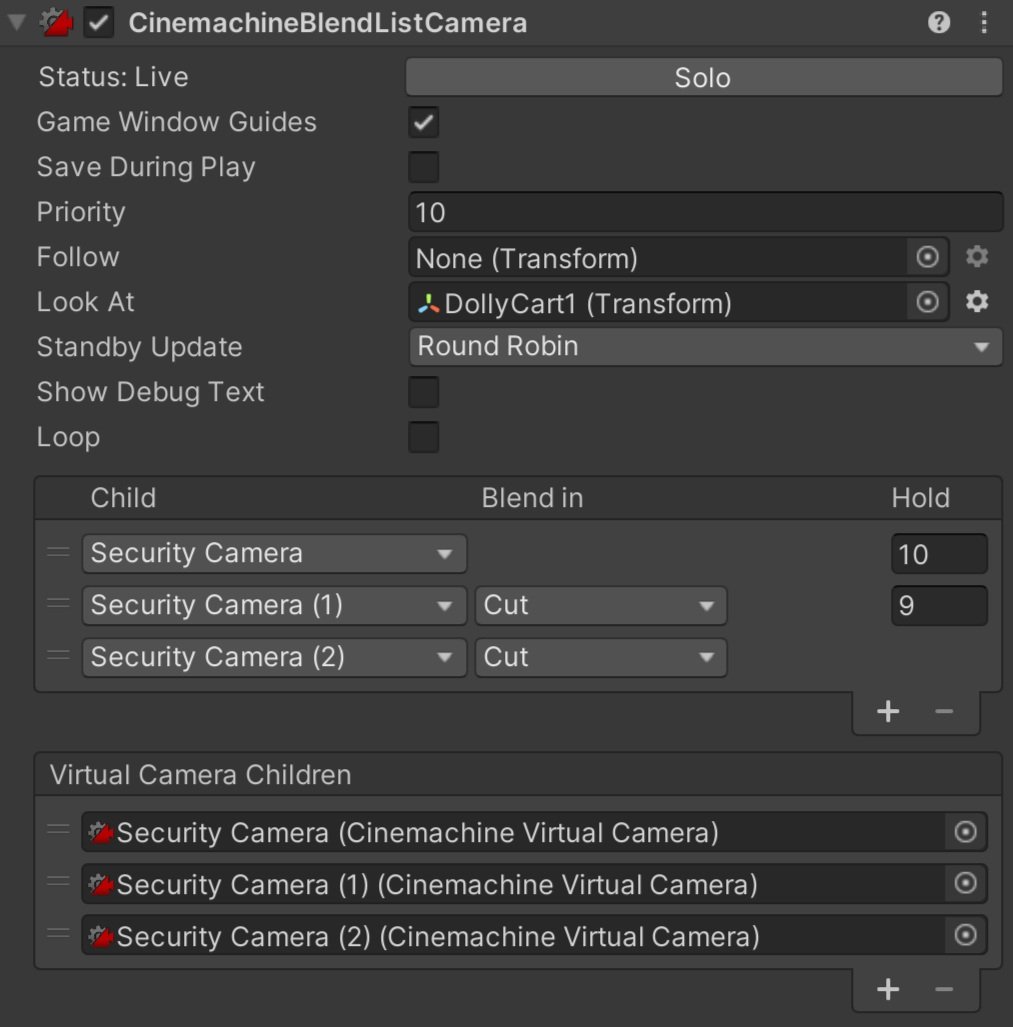

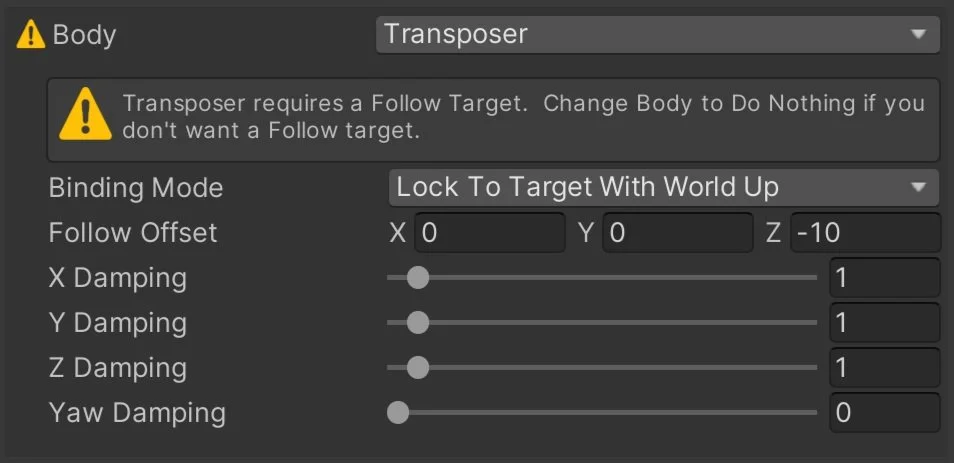

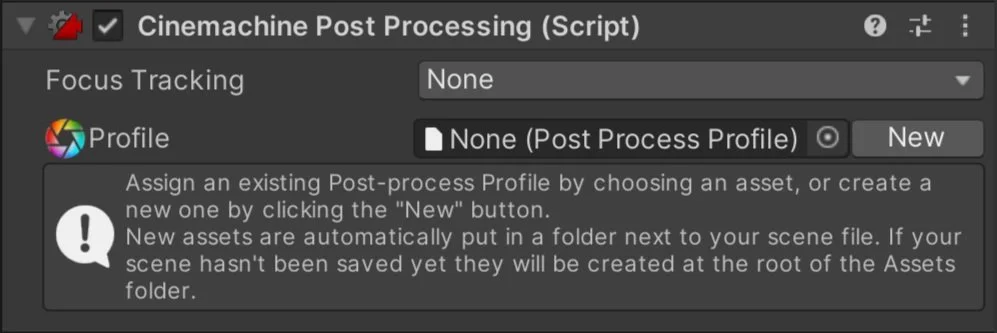

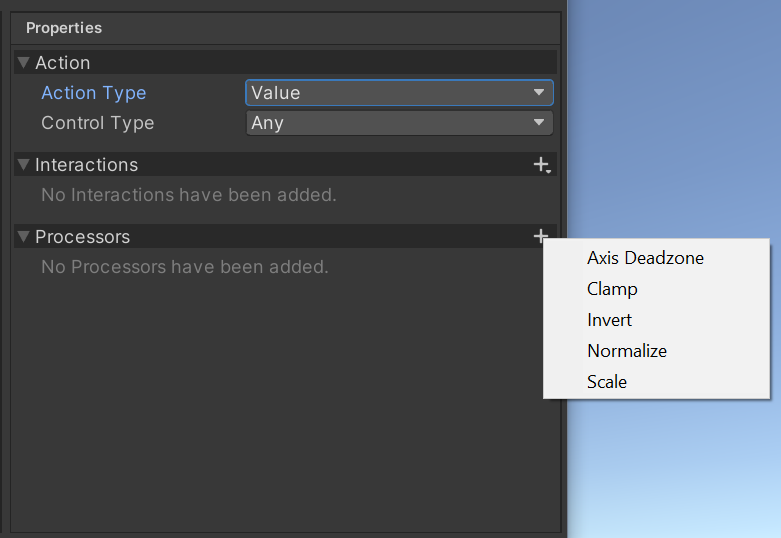

Assets I Use

When I start asking these questions it’s easy to fall into the trap of using 3rd party assets to solve my design problems or fill in my lack of knowledge. It’s easy to use too many or use the wrong ones. I need to be very picky about what I use. Doubly so with assets that are used at runtime (as opposed to editor tools). For me, assets need to work out of the box AND work independently. If my 3rd party inventory system needs to talk to my 3rd party quest system, which needs to talk to my 3rd party dialogue system, I am asking for trouble and I will likely find it.

The asset store is full of shiny objects and rat holes. It’s worth a lot of time to think about what you really need from the asset store.

What can you create on your own? What should you NOT create on your own? What can you design around? Do you really need X or Y?

For me, simple is almost always better. If I do use 3rd party assets, and I do, they need to be part of the prototyping stage. I read the documentation and try to answer as many questions as I can before integrating the asset into my project. If the asset can’t do what I need, then I may have to make hard decisions about the asset, my design, or even the game as a whole.

I constantly have to remind myself that games aren’t fun because they’re complex. Or at the very least, complexity does not equal fun. What makes games fun is something far more subtle. Complexity is a rat hole. A shiny object.

Deep Breath. Pause. Think.

At this point, I have a rough sketch in my head of the game and it’s easy to get excited and jump into building with both feet. But! I need to stop. Breathe. And think.

Does the game match my goals? Can I actually make the game? Are there mechanics that should be thrown out? Can I simplify the game and still reach my goal? Is this idea truly viable?

Depending on the answers, I might need to go back and prototype, do more research, or scrap the entire design and start with something a single guy can actually make.

This point is a tipping point. I can slow down and potentially re-design the game or spend the next 6 months discovering my mistakes. Or worse, ignoring my mistakes and wasting even more time as I stick my head in the sand and insist I can build the game. I’ve been there. I’ve done that. And it wasn’t fun.

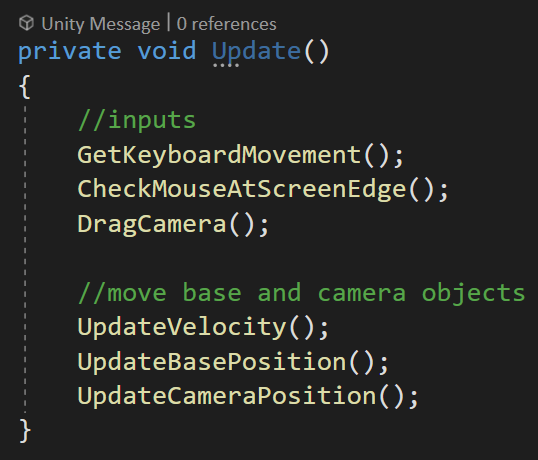

Now We Plan

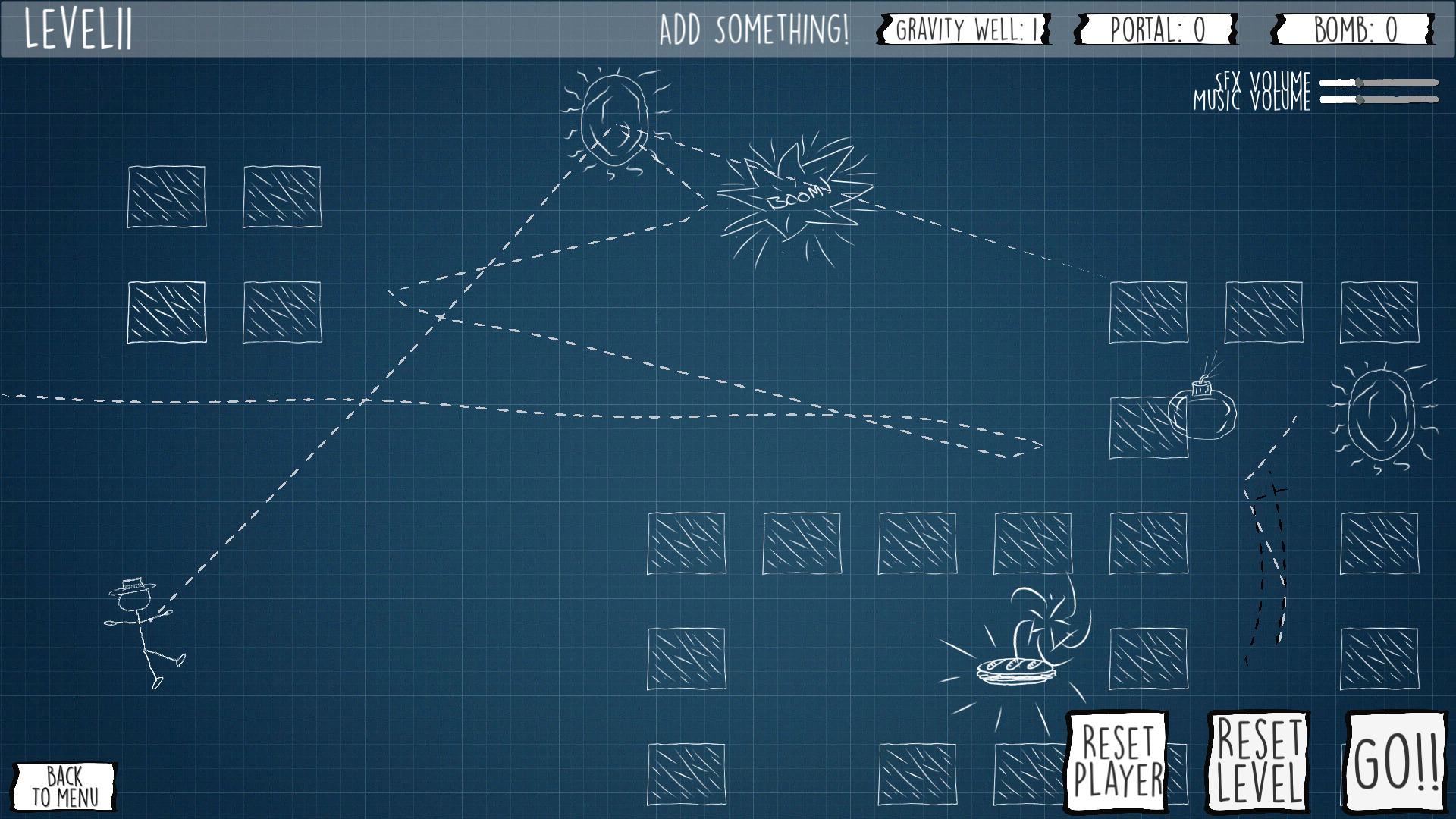

Maybe a third of the items on my to-do list for Grub Gauntlet

Ha! I bet you thought I was done planning. Not even close. I haven’t even really started.

There are a lot of opinions about the best planning tool. For me, I like Notion. Others like Milanote or just a simple Google Doc. The tool doesn’t matter; it’s the process. So pick what works for you and don’t spend too much time trying to find the “best” tool. There’s a poop ton of work to do, so don’t waste time.

Finding the right level of detail in planning is tough and definitely not a waste of time. I’m not creating some 100+ page Game Design Document. Rather, I think of what I’m creating as a to-do list. Big tasks. Small tasks. Medium tasks. I want to plan out all the major systems, all the art, and all the content. This is my chance to think through the game as a whole before sinking 100s or likely 1000s of hours into the project.

To some extent, the resulting document forms a contract with myself and helps prevent feature creep. The plan also helps when I’m tired or don’t know what to do next. I can pull up my list and tackle something small or something interesting.

Somewhere in the planning process, I need to decide on a theme or skin for the game. The naming of classes or objects may depend on the theme AND more importantly, some of the mechanics may be easier or harder to implement depending on the theme. For example, Creeper World 4’s flying tanks totally work in the sci-fi-themed world. Not so much if they were flying catapults or swordsmen in a fantasy world. Need to resupply units? Creeper World sends the resources over power lines. Again, way easier than an animated 3D model of a worker using a navigation system to run from point A to point B and back again.

Does the theme match the mechanics? Does it match my skillset? Can I make that style of art? Does the theme help reach the goal? Does the theme simplify mechanics or make them more complex?

Minimum Viable Product (MVP)

Upgrade that Knowledge

Finally! Now I get to start building the project structure, writing code, and bringing in some art. But! I’m still not building the game. I’m still testing. I want to get something playable as fast as possible. I need to answer the questions:

Is the game fun? Have I over-scoped the game? Can I actually build it with my current skills and available time?

If I spent 3 months working on an inventory system and all I can do is collect bits on a terrain and sell them to a store, I’ve over-scoped the game. If the game is tedious and not fun, then I either need to scrap the game or dig deeper into the design and try to fix it. If the game breaks every time I add something or change a system, then I need to rethink the architecture, or maybe the scope of the game, or upgrade my programming knowledge and skill set.

If I can create the MVP in less than a month and it’s fun then I’m on to something good!

Why so short a time frame? My last project, Grub Gauntlet, was created during a 48-hour game jam. I spent roughly 20 hours during that time essentially creating an MVP. It then took another 10 months to release! I figure the MVP is somewhere around 1/10th or 1/20th of the total build time.
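That rule of thumb is easy to turn into a quick back-of-the-envelope estimate. The sketch below is only arithmetic on the 1/10th to 1/20th ratio; the function name and the one-month input are assumptions for illustration, and the result is a rough range, not a schedule.

```python
def estimate_total_build_time(mvp_time, low_multiplier=10, high_multiplier=20):
    """Rough total build time if the MVP is 1/10th to 1/20th of the whole project.

    mvp_time can be in any unit (hours, weeks, months); the result uses the same unit.
    """
    return (mvp_time * low_multiplier, mvp_time * high_multiplier)

# An MVP that takes one month of work implies roughly 10 to 20 months in total.
low, high = estimate_total_build_time(1.0)
print(f"{low:.0f} to {high:.0f} months")  # 10 to 20 months
```

Seen this way, an MVP that takes much longer than a month pushes the whole project well past a 12 to 18 month target.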

It’s way better to lose 1-2 months building, testing, and then decide to scrap the project than to spend 1-2 years building a pile of crap. Or worse! Spend years working only to give up without a finished product.

Can I Build It Now?

This is the part we’re all excited about. Now I get to build, polish, and finish a game. There’s no secret sauce. This part is the hardest. It’s the longest. It’s the most discouraging. It’s also the most rewarding.

If I’ve done my work ahead of time then I should be able to finish my project. And that? That is an amazing feeling!